Recurrent Neural Networks (RNNs) are a class of artificial neural networks designed to handle sequential data. They are widely used in applications such as natural language processing, speech recognition, and time series analysis. What distinguishes RNNs from feedforward networks is the loop in their architecture: the hidden state computed at one time step is fed back in at the next, which lets the network carry information forward through the sequence.

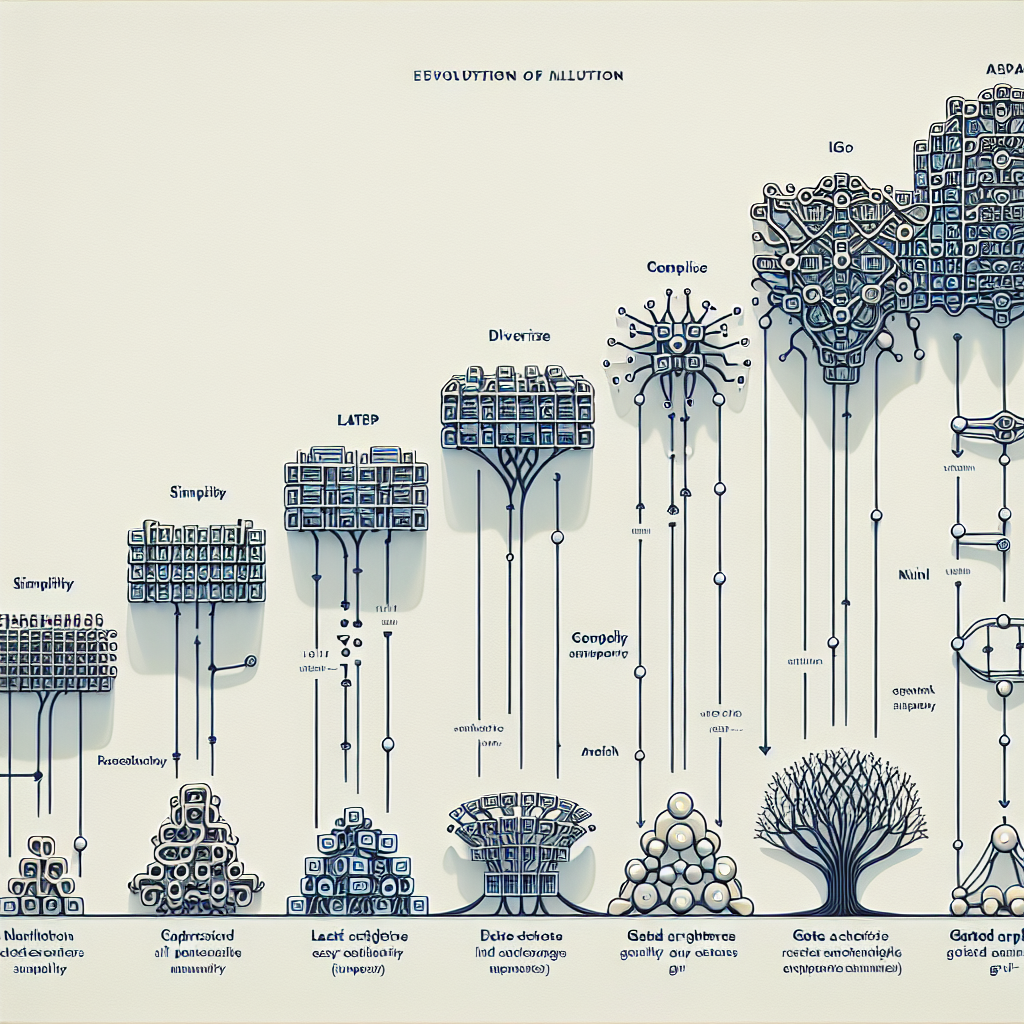

The first and simplest form of RNN is the Vanilla RNN, which dates back to the 1980s. A Vanilla RNN has a single layer of recurrent units that processes an input sequence one element at a time, updating its hidden state at each step. However, Vanilla RNNs suffer from the vanishing gradient problem: as gradients are backpropagated through time they are multiplied by a similar factor at every step, so they can shrink exponentially with the length of the sequence. This makes it difficult for Vanilla RNNs to learn long-term dependencies in sequential data.
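To make the vanishing gradient concrete, here is a minimal sketch using a scalar Vanilla RNN with a tanh activation. The weights, the constant input sequence, and the function names are made up for illustration; a real network uses weight matrices, but the same chain-rule product appears.

```python
import math

def rnn_forward(xs, w_h, w_x, b=0.0, h0=0.0):
    """Scalar vanilla RNN: h_t = tanh(w_h * h_{t-1} + w_x * x_t + b)."""
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w_h * hs[-1] + w_x * x + b))
    return hs  # hs[0] is the initial state, hs[t] the state after step t

def grad_wrt_initial_state(hs, w_h):
    """d h_T / d h_0 by the chain rule: the product of w_h * (1 - h_t^2) over steps."""
    grad = 1.0
    for h in hs[1:]:
        grad *= w_h * (1.0 - h * h)  # derivative of tanh is 1 - tanh^2
    return grad

xs = [0.5] * 50  # made-up constant input sequence
hs = rnn_forward(xs, w_h=0.5, w_x=1.0)
short_grad = grad_wrt_initial_state(hs[:6], w_h=0.5)  # through 5 steps
long_grad = grad_wrt_initial_state(hs, w_h=0.5)       # through 50 steps
print(short_grad, long_grad)
```

Every factor in the product has magnitude below 0.5 here, so the gradient through 50 steps is many orders of magnitude smaller than the gradient through 5 steps. An error signal from the end of the sequence therefore has almost no influence on how the earliest steps are adjusted.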

To address this issue, researchers developed RNN architectures with gating mechanisms that capture long-term dependencies more reliably. One of the most popular is the Long Short-Term Memory (LSTM) network, introduced in 1997 by Hochreiter and Schmidhuber. The LSTM as commonly used today maintains a separate cell state and has three gates (input, forget, and output) that control what is written to, kept in, and read from that state. Because the cell state is updated additively rather than being fully rewritten at every step, LSTMs learn long-term dependencies more effectively than Vanilla RNNs.
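The three gates can be sketched as a single LSTM time step in plain Python. This follows the commonly used formulation; the parameter layout (one weight matrix per gate acting on the concatenation of the previous hidden state and the input) and the `constant_gate` helper are assumptions made for illustration, not a reference implementation.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Return W @ v + b for a list-of-rows matrix W."""
    return [sum(w * vi for w, vi in zip(row, v)) + bi for row, bi in zip(W, b)]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step. `params` maps a gate name to (W, b), applied to [h_prev, x]."""
    hx = h_prev + x  # list concatenation
    i = [sigmoid(a) for a in affine(*params["input"], hx)]       # what to write
    f = [sigmoid(a) for a in affine(*params["forget"], hx)]      # what to keep
    o = [sigmoid(a) for a in affine(*params["output"], hx)]      # what to expose
    g = [math.tanh(a) for a in affine(*params["candidate"], hx)] # candidate values
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c

def constant_gate(hidden, inputs, bias):
    """Hypothetical helper: zero weights and a constant bias, for illustration only."""
    return ([[0.0] * (hidden + inputs) for _ in range(hidden)], [bias] * hidden)

hidden, inputs = 2, 1
params = {
    "input": constant_gate(hidden, inputs, -10.0),    # gate almost closed
    "forget": constant_gate(hidden, inputs, 10.0),    # gate almost fully open
    "output": constant_gate(hidden, inputs, 0.0),
    "candidate": constant_gate(hidden, inputs, 0.0),
}
h, c = [0.0, 0.0], [1.0, -2.0]
for x in [[0.3], [-0.7], [0.9]]:
    h, c = lstm_step(x, h, c, params)
print(c)
```

With the forget gate held open and the input gate held shut, the cell state passes through three steps almost unchanged. In a trained network the gates depend on the input and the previous hidden state, so the model learns when to preserve and when to overwrite its memory.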

Another popular gated RNN architecture is the Gated Recurrent Unit (GRU), introduced in 2014 by Cho et al. GRUs are simpler than LSTMs: they have only two gates (reset and update) and no separate cell state. Despite this simpler design, GRUs have been shown to perform comparably to LSTMs on many tasks.
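For comparison, a GRU time step can be sketched the same way. Conventions for the update gate differ between references; this sketch assumes the one in which an update gate near 1 keeps the previous state. The parameter layout and the `constant_gate` helper are again illustrative assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Return W @ v + b for a list-of-rows matrix W."""
    return [sum(w * vi for w, vi in zip(row, v)) + bi for row, bi in zip(W, b)]

def gru_step(x, h_prev, params):
    """One GRU step. `params` maps a gate name to (W, b), applied to [state, x]."""
    hx = h_prev + x  # list concatenation
    z = [sigmoid(a) for a in affine(*params["update"], hx)]
    r = [sigmoid(a) for a in affine(*params["reset"], hx)]
    # The reset gate scales the old state before it enters the candidate.
    gated = [rk * hk for rk, hk in zip(r, h_prev)] + x
    cand = [math.tanh(a) for a in affine(*params["candidate"], gated)]
    # Assumed convention: z near 1 keeps the old state, z near 0 takes the candidate.
    return [zk * hk + (1.0 - zk) * ck for zk, hk, ck in zip(z, h_prev, cand)]

def constant_gate(hidden, inputs, bias):
    """Hypothetical helper: zero weights and a constant bias, for illustration only."""
    return ([[0.0] * (hidden + inputs) for _ in range(hidden)], [bias] * hidden)

hidden, inputs = 2, 1
h_prev, x = [0.5, -0.5], [0.2]
base = {
    "reset": constant_gate(hidden, inputs, 0.0),
    "candidate": constant_gate(hidden, inputs, 1.0),  # candidate is tanh(1) everywhere
}
kept = gru_step(x, h_prev, {**base, "update": constant_gate(hidden, inputs, 10.0)})
overwritten = gru_step(x, h_prev, {**base, "update": constant_gate(hidden, inputs, -10.0)})
print(kept, overwritten)
```

The single update gate does the work that the input and forget gates share in an LSTM: it interpolates between the old state and the candidate. That coupling is why the GRU needs three affine maps per step where the LSTM needs four.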

In recent years, sequence modeling has also moved beyond recurrence. The Transformer model replaces recurrent connections with self-attention, which lets every position in a sequence attend directly to every other position and so capture long-range dependencies. Transformers have achieved state-of-the-art performance in various natural language processing tasks.
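The self-attention idea can be illustrated with a stripped-down sketch of scaled dot-product attention. As a simplifying assumption, the queries, keys, and values are all taken to be the raw inputs; an actual Transformer applies learned projections and uses multiple attention heads, which are omitted here.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(X):
    """Scaled dot-product self-attention with Q = K = V = X (no learned projections)."""
    d = len(X[0])
    outputs, weights = [], []
    for q in X:
        # Similarity of this position to every position in the sequence.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in X]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of all value vectors.
        outputs.append([sum(wj * v[col] for wj, v in zip(w, X)) for col in range(d)])
    return outputs, weights

X = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]  # made-up 3-step sequence of 2-d vectors
outputs, weights = self_attention(X)
print(weights[0])
```

Position 0 gives the same weight to position 2 as to itself, because the two vectors are identical. The weight depends on content rather than on how far apart the positions are, which is what lets attention connect distant elements in a single step instead of passing information through every intermediate state.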

Overall, the evolution from Vanilla RNNs to gated architectures like LSTMs and GRUs has greatly improved the ability of recurrent networks to handle sequential data, in particular to learn long-term dependencies. These advances have enabled impressive results across a wide range of applications, and they remain a foundation for work on sequence modeling in deep learning.


