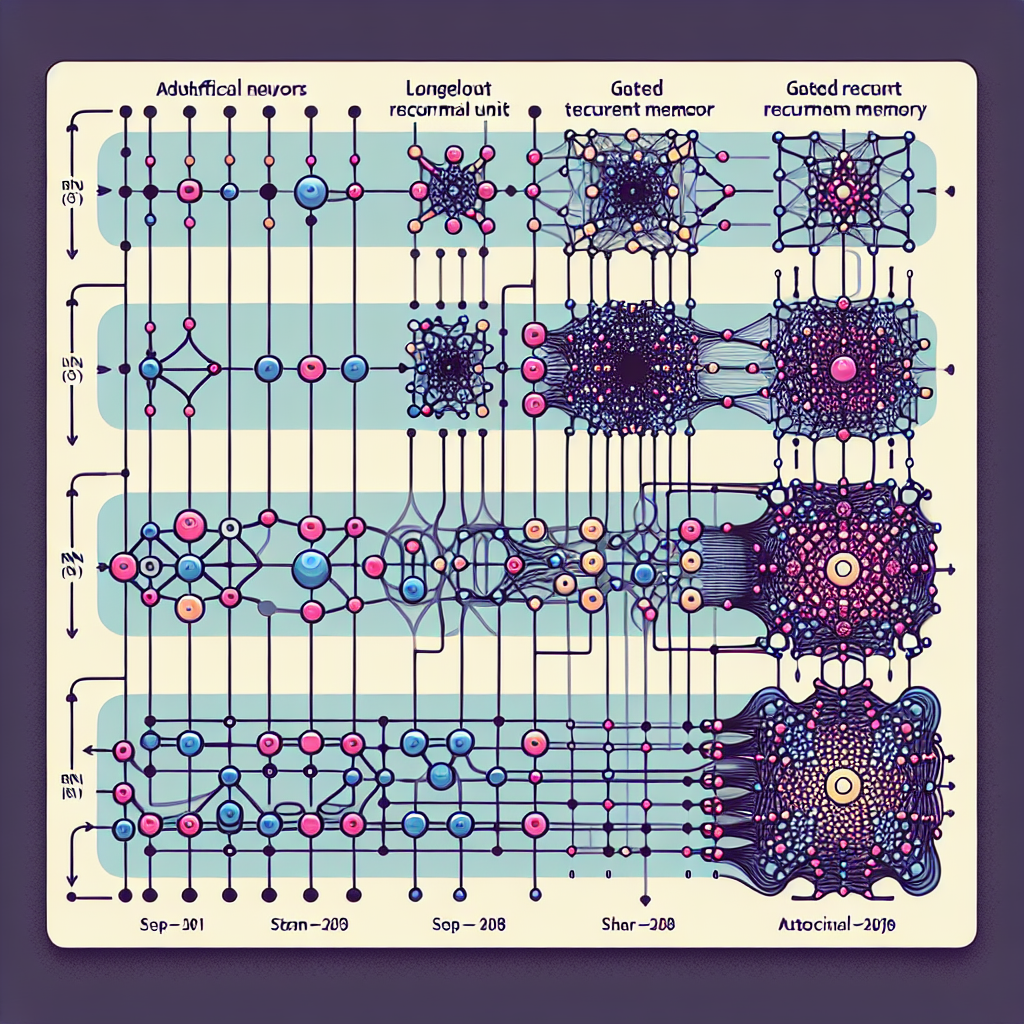

Recurrent Neural Networks (RNNs) have become a popular choice for sequence modeling tasks such as natural language processing, speech recognition, and time series analysis. The architecture of RNNs has evolved significantly over the years, from simple recurrent units to more complex gated architectures like Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU).

The simplest RNN consists of a single recurrent unit that, at each time step, takes an input and the previous hidden state and produces an output and a new hidden state, which is passed on to the next time step. This architecture captures short-term dependencies well, but it suffers from the vanishing gradient problem: as gradients are propagated back through many time steps they can shrink toward zero, so the model struggles to learn long-term dependencies.
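To make the recurrence and the vanishing gradient concrete, here is a minimal sketch of a single-unit recurrent cell in plain Python. It assumes a scalar hidden state and a tanh activation; the function name `rnn_forward` and the weight values are illustrative choices, not taken from any library. Alongside the hidden states, it tracks the derivative of the current hidden state with respect to the initial one.

```python
import math

def rnn_forward(inputs, w_x=0.5, w_h=0.9, b=0.0, h0=0.0):
    """Run a single-unit RNN over a sequence.

    Recurrence (assumed form): h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).
    Returns the hidden states and, for each step, d h_t / d h_0.
    """
    h = h0
    grad = 1.0          # d h_t / d h_0, built up with the chain rule
    states, grads = [], []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)
        # d h_t / d h_{t-1} = w_h * (1 - h_t^2) for a tanh unit
        grad *= w_h * (1.0 - h * h)
        states.append(h)
        grads.append(grad)
    return states, grads

# Illustrative run: a constant input of 1.0 for 50 time steps.
states, grads = rnn_forward([1.0] * 50)
print(f"|dh_5/dh_0|  = {abs(grads[4]):.3e}")
print(f"|dh_50/dh_0| = {abs(grads[49]):.3e}")
```

Each factor in the product has magnitude at most `|w_h|`, so with `|w_h| < 1` the derivative shrinks at least geometrically with sequence length. Real RNNs use weight matrices rather than scalars, but the same repeated multiplication is what makes early inputs hard to learn from.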

To address this issue, researchers introduced gated architectures that let RNNs selectively update and forget information over time. The LSTM architecture, proposed by Hochreiter and Schmidhuber in 1997, originally used input and output gates around a memory cell; the forget gate was added later by Gers et al. In its now-standard form, the LSTM has three gates (input, output, and forget) that control what is written to, read from, and erased from the cell state. This lets the LSTM retain information across long spans and capture complex patterns in sequences.
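The gating described above can be sketched for a single LSTM unit as follows. This is a simplified illustration in plain Python, assuming the standard three-gate formulation with scalar weights; the name `lstm_step`, the parameter dictionary layout, and the specific values are hypothetical and chosen only to show the mechanism.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, params):
    """One step of a single-unit LSTM.

    params maps each of "forget", "input", "output", "candidate"
    to a tuple (w_x, w_h, b) of scalar weights (an assumed layout).
    """
    def pre(name):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))       # how much of the old cell state to keep
    i = sigmoid(pre("input"))        # how much of the candidate to write
    o = sigmoid(pre("output"))       # how much of the cell state to expose
    g = math.tanh(pre("candidate"))  # candidate value to write

    c = f * c_prev + i * g           # additive cell-state update
    h = o * math.tanh(c)             # new hidden state
    return h, c

# Illustrative parameters: forget gate nearly open, input gate nearly closed,
# so the cell should hold on to its initial content.
remember = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
}

h, c = 0.0, 1.0
for _ in range(20):
    h, c = lstm_step(1.0, h, c, remember)
print(f"cell state after 20 steps: {c:.4f}")
```

Because the cell state is updated additively and scaled by a forget gate that can sit close to 1, information (and gradient) can pass through many steps with little decay, which is the property the simple recurrent unit lacks.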

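Another popular gated architecture is the GRU, proposed by Cho et al. in 2014. The GRU simplifies the LSTM by merging the input and forget gates into a single update gate and folding the cell state into the hidden state; a reset gate controls how much of the previous state feeds into the candidate state. The result has fewer parameters than an LSTM of the same size, which makes it cheaper to compute, while still capturing long-term dependencies in sequences.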
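For comparison with the LSTM, a single GRU unit can be sketched as follows. This is a minimal plain-Python illustration with scalar weights; `gru_step` and the parameter layout are hypothetical names. It assumes the convention in which the update gate `z` weights the previous state (some references swap the roles of `z` and `1 - z`).

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gru_step(x, h_prev, params):
    """One step of a single-unit GRU.

    params maps each of "update", "reset", "candidate"
    to a tuple (w_x, w_h, b) of scalar weights (an assumed layout).
    """
    w_x, w_h, b = params["update"]
    z = sigmoid(w_x * x + w_h * h_prev + b)   # update gate: keep vs. overwrite

    w_x, w_h, b = params["reset"]
    r = sigmoid(w_x * x + w_h * h_prev + b)   # reset gate: how much past to use

    w_x, w_h, b = params["candidate"]
    h_cand = math.tanh(w_x * x + w_h * (r * h_prev) + b)

    # One gate does both jobs: z keeps the old state, (1 - z) writes the new one.
    return z * h_prev + (1.0 - z) * h_cand

# Illustrative parameters: an update gate held near 1 keeps the old state.
keep = {
    "update": (0.0, 0.0, 20.0),
    "reset": (0.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
}

h = 0.7
for _ in range(20):
    h = gru_step(1.0, h, keep)
print(f"hidden state after 20 steps: {h:.4f}")
```

The sketch uses three weight sets where the LSTM sketch would need four, and it carries a single state `h` instead of separate hidden and cell states, which is where the parameter savings come from.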

These gated architectures have significantly improved the performance of RNNs on various sequence modeling tasks. They have been successfully applied to machine translation, sentiment analysis, and speech recognition, among others. Researchers continue to explore new variations of gated architectures to further improve the capabilities of RNNs.

Overall, the move from simple recurrent units to gated architectures was a significant advance in deep learning. Gating enabled RNNs to capture long-term dependencies in sequences and achieve strong results on a wide range of tasks. As researchers continue to refine these architectures, further improvements in sequence modeling can be expected.
