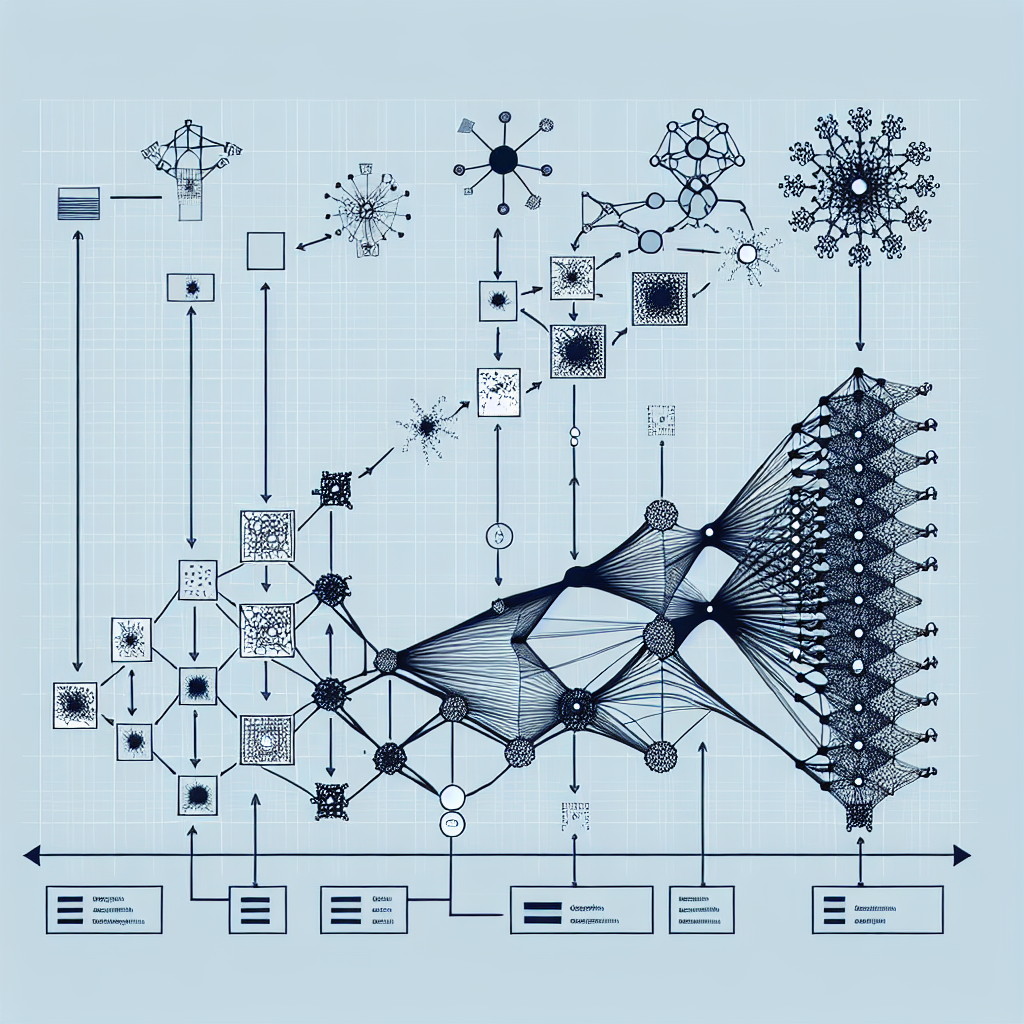

The Evolution of RNNs: From Basic Concepts to Advanced Applications

Recurrent Neural Networks (RNNs) have come a long way since their inception in the late 1980s. Originally designed as a way to model sequential data, RNNs have evolved to become a powerful tool for a wide range of applications, from natural language processing to time series analysis.

The basic concept behind RNNs is simple: they are neural networks with recurrent connections, so the hidden state computed at one time step is fed back into the network at the next step. This hidden state acts as a memory of previous inputs, making RNNs well suited to tasks that involve sequences of data. The ability to carry information from past inputs forward and use it to predict what comes next is what sets RNNs apart from feedforward networks, which process each fixed-size input independently.
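As a minimal sketch of this idea, the NumPy code below runs a vanilla (Elman-style) RNN over a short sequence using the common update `h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)`. The function name, weight names, sizes and random weights are illustrative assumptions, not any particular library's API.

```python
import numpy as np

def rnn_forward(xs, W_xh, W_hh, b_h, h0=None):
    """Run a vanilla (Elman-style) RNN over one sequence.

    xs: array of shape (T, input_dim), one row per time step.
    Returns the hidden state at every step, shape (T, hidden_dim).
    """
    hidden_dim = W_hh.shape[0]
    h = np.zeros(hidden_dim) if h0 is None else h0
    states = []
    for x_t in xs:
        # The same weights are reused at every step; h is fed back in,
        # so it carries a "memory" of all earlier inputs.
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)

# Illustrative sizes and random (untrained) weights.
rng = np.random.default_rng(0)
input_dim, hidden_dim, T = 3, 4, 5
W_xh = rng.normal(scale=0.5, size=(hidden_dim, input_dim))
W_hh = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
b_h = np.zeros(hidden_dim)
xs = rng.normal(size=(T, input_dim))

hs = rnn_forward(xs, W_xh, W_hh, b_h)
print(hs.shape)  # (5, 4)
```

Because `h` is fed back in at every step, the final state `hs[-1]` depends on every earlier input, not just the last one.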

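Early RNNs were limited by the problem of vanishing gradients: when errors are backpropagated through many time steps, the gradient is multiplied again and again by the recurrent weights and the derivatives of the activation function, so it can shrink toward zero. This made it difficult for them to learn long-range dependencies in sequences. Gated architectures address this issue by allowing the network to selectively update its memory. Long Short-Term Memory (LSTM), introduced by Hochreiter and Schmidhuber in 1997, and the Gated Recurrent Unit (GRU), introduced by Cho et al. in 2014, came into widespread use in the early 2010s.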
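The gating idea can be sketched with a single LSTM step in NumPy. This assumes the standard LSTM formulation with input, forget and output gates; the stacked weight layout and the gate order are arbitrary choices for this sketch (libraries order the gates differently), and the weights are random rather than trained. A GRU follows the same principle with two gates (update and reset) and no separate cell state.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM step.

    W has shape (4*H, D+H) and b has shape (4*H,), stacked (by assumption)
    as [input gate, forget gate, output gate, candidate].
    """
    H = h_prev.shape[0]
    z = W @ np.concatenate([x_t, h_prev]) + b
    i = sigmoid(z[0:H])          # input gate: how much new content to write
    f = sigmoid(z[H:2 * H])      # forget gate: how much old memory to keep
    o = sigmoid(z[2 * H:3 * H])  # output gate: how much memory to expose
    g = np.tanh(z[3 * H:4 * H])  # candidate content
    # Additive cell update: the memory is selectively kept and overwritten.
    c = f * c_prev + i * g
    h = o * np.tanh(c)
    return h, c

# Illustrative sizes and random (untrained) weights.
rng = np.random.default_rng(0)
D, H, T = 3, 4, 6
W = rng.normal(scale=0.3, size=(4 * H, D + H))
b = np.zeros(4 * H)
h, c = np.zeros(H), np.zeros(H)
for x_t in rng.normal(size=(T, D)):
    h, c = lstm_step(x_t, h, c, W, b)
print(h.shape, c.shape)  # (4,) (4,)
```

The key line is `c = f * c_prev + i * g`: with the forget gate near 1 and the input gate near 0, the cell state is copied forward almost unchanged instead of being squashed through a nonlinearity at every step, as happens to the hidden state of a vanilla RNN.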

These advancements in RNN architecture have led to a surge in the use of RNNs for a wide range of applications. In natural language processing, RNNs have been used for tasks such as language modeling, machine translation, and sentiment analysis. In time series analysis, RNNs have been used for tasks such as forecasting stock prices and detecting anomalies in sensor data.

One of the key advantages of RNNs is their ability to handle variable-length sequences of data: because the same weights are applied at every time step, the network simply runs for as many steps as the input has. This makes them well suited to processing text, audio, or video data, where the length of the input can vary from one example to the next.
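In practice, variable-length sequences are usually batched by padding them to a common length and masking the padded steps. The sketch below assumes zero-padding, a vanilla tanh cell and illustrative random weights; `rnn_last_states` is a hypothetical helper that freezes each sequence's hidden state once its real input ends.

```python
import numpy as np

def rnn_last_states(batch, lengths, W_xh, W_hh, b_h):
    """Run a vanilla RNN over a zero-padded batch and return each
    sequence's hidden state at its own final (unpadded) step.

    batch: shape (B, T_max, D); lengths: true length of each sequence.
    """
    B, T_max, _ = batch.shape
    h = np.zeros((B, W_hh.shape[0]))
    for t in range(T_max):
        h_new = np.tanh(batch[:, t] @ W_xh.T + h @ W_hh.T + b_h)
        # Mask: only sequences still "active" at step t are updated;
        # finished sequences keep their last valid hidden state.
        active = (t < lengths)[:, None]
        h = np.where(active, h_new, h)
    return h

# Illustrative sizes and random (untrained) weights.
rng = np.random.default_rng(1)
D, H = 2, 3
W_xh = rng.normal(scale=0.5, size=(H, D))
W_hh = rng.normal(scale=0.5, size=(H, H))
b_h = np.zeros(H)

seqs = [rng.normal(size=(n, D)) for n in (5, 2, 4)]  # three different lengths
lengths = np.array([len(s) for s in seqs])
batch = np.zeros((len(seqs), lengths.max(), D))
for k, s in enumerate(seqs):
    batch[k, :len(s)] = s  # zero-pad each sequence to the longest length

final = rnn_last_states(batch, lengths, W_xh, W_hh, b_h)
print(final.shape)  # (3, 3)
```

Masking means the padding never leaks into the result: each row of `final` matches what the RNN would produce on that sequence alone.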

In recent years, researchers have continued to push the boundaries of what RNNs can achieve. For example, in image captioning, researchers have combined RNNs with convolutional neural networks (CNNs): the CNN encodes the image into a feature vector, and the RNN generates a description from it one word at a time. In reinforcement learning, researchers have used RNNs to build models that can learn to play video games or control robotic systems.
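The CNN-plus-RNN captioning idea can be sketched as a decoder that takes an image feature vector and emits words greedily. Everything here is an assumption for illustration: the toy vocabulary, initializing the hidden state from the image features (one of several conditioning schemes), and a random vector standing in for real CNN features. With untrained weights the words are arbitrary; the point is the control flow.

```python
import numpy as np

# Hypothetical toy vocabulary: index 0 starts a caption, index 1 ends it.
VOCAB = ["<start>", "<end>", "a", "dog", "on", "the", "grass"]

def greedy_caption(image_features, params, max_len=10):
    """Greedy decoding with a vanilla RNN decoder conditioned on an image.

    image_features stands in for a CNN's pooled output vector (assumption);
    it is mapped to the decoder's initial hidden state.
    """
    W_init, E, W_xh, W_hh, W_hy = params
    h = np.tanh(W_init @ image_features)  # image -> initial memory
    token = 0                             # begin with <start>
    words = []
    for _ in range(max_len):
        x = E[token]                      # embed the previous word
        h = np.tanh(W_xh @ x + W_hh @ h)
        logits = W_hy @ h                 # scores over the vocabulary
        logits[0] = -np.inf               # never emit <start> mid-caption
        token = int(np.argmax(logits))    # greedy: pick the top-scoring word
        if token == 1:                    # <end> stops the caption
            break
        words.append(VOCAB[token])
    return words

# Illustrative sizes and random (untrained) weights.
rng = np.random.default_rng(0)
F, E_dim, H, V = 8, 5, 6, len(VOCAB)
params = (
    rng.normal(scale=0.3, size=(H, F)),      # W_init: features -> hidden
    rng.normal(scale=0.3, size=(V, E_dim)),  # E: word embeddings
    rng.normal(scale=0.3, size=(H, E_dim)),  # W_xh
    rng.normal(scale=0.3, size=(H, H)),      # W_hh
    rng.normal(scale=0.3, size=(V, H)),      # W_hy: hidden -> vocabulary
)
caption = greedy_caption(rng.normal(size=F), params)
print(" ".join(caption))  # arbitrary words, since the weights are untrained
```

Greedy decoding is the simplest choice; beam search, which keeps several candidate captions at each step, is a common alternative.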

Overall, the evolution of RNNs from basic concepts to advanced applications has been driven by a combination of theoretical advances and practical innovations. As researchers continue to explore the capabilities of RNNs, we can expect to see even more exciting applications in the future.
