The Evolution of RNNs: From Concept to Cutting-Edge Technology

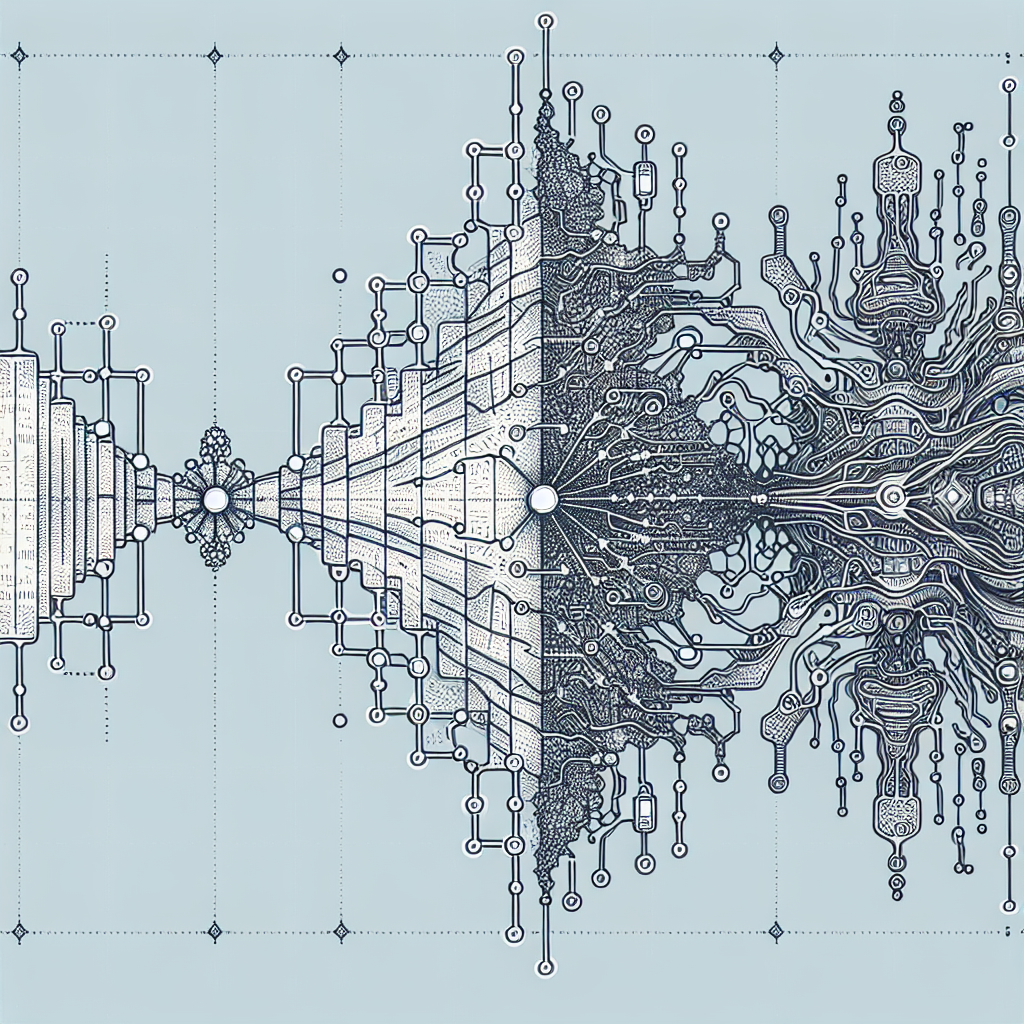

Recurrent Neural Networks (RNNs) have come a long way since they emerged in the 1980s. Designed to handle sequential data, they have been applied to a wide range of tasks, from natural language processing and speech recognition to image captioning. In this article, we trace the evolution of RNNs from concept to cutting-edge technology.

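Recurrent architectures took shape in the 1980s through work by researchers including John Hopfield, Michael Jordan, and David Rumelhart, and Paul Werbos helped formalize backpropagation through time, the standard method for training them. Widespread adoption in the machine learning community came later, once larger datasets and faster hardware made these networks practical to train. The key idea behind RNNs is a hidden state that is updated at every step and carries a summary of past inputs forward. This makes them well suited to tasks such as speech recognition, time series prediction, and language modeling.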
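
To make the idea of a carried-forward memory concrete, here is a minimal sketch of a recurrent update, assuming a scalar input, a scalar hidden state, and a tanh activation. The function names `rnn_step` and `run_rnn` and the weight values are illustrative assumptions, not taken from any particular library.

```python
import math


def rnn_step(x, h_prev, w_xh, w_hh, b):
    """One recurrent step: mix the new input with the previous hidden state."""
    return math.tanh(w_xh * x + w_hh * h_prev + b)


def run_rnn(inputs, w_xh=0.5, w_hh=0.8, b=0.0):
    """Run the toy RNN over a sequence and return the hidden state at each step.

    The weights are arbitrary illustrative values, not trained parameters.
    """
    h = 0.0  # initial hidden state: no memory yet
    states = []
    for x in inputs:
        h = rnn_step(x, h, w_xh, w_hh, b)
        states.append(h)
    return states


# A single nonzero input at the first step still influences later states,
# because each state is computed from the one before it.
states = run_rnn([1.0, 0.0, 0.0])
```

In this toy setting the later states stay nonzero even though the later inputs are zero, which is the "memory" the paragraph above describes. Real RNNs use vectors and weight matrices in place of these scalars.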

One of the early challenges with RNNs was the vanishing gradient problem: as gradients are propagated back through many time steps, they are repeatedly multiplied by small factors and shrink toward zero, making it difficult for the model to learn long-range dependencies. This motivated gated architectures such as Long Short-Term Memory (LSTM) and, later, Gated Recurrent Unit (GRU) networks, whose gates control what is kept, updated, or forgotten and so capture long-term dependencies more reliably.
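
The shrinking effect can be illustrated numerically. The sketch below assumes a scalar tanh RNN with no external input and tracks how strongly the hidden state at step t depends on the initial state. The function name `gradient_through_time` and the chosen weight are hypothetical, picked only to make the effect visible.

```python
import math


def gradient_through_time(w_hh, steps, h0=0.5):
    """Return |d h_t / d h_0| for t = 1..steps in a scalar tanh RNN.

    Assumes the update h_t = tanh(w_hh * h_{t-1}) with no input term.
    """
    h = h0
    grad = 1.0
    magnitudes = []
    for _ in range(steps):
        h = math.tanh(w_hh * h)
        # Chain rule: d tanh(w * h_prev) / d h_prev = w * (1 - tanh(...)^2)
        grad *= w_hh * (1.0 - h * h)
        magnitudes.append(abs(grad))
    return magnitudes


# With a recurrent weight below 1, every step multiplies the gradient by a
# factor smaller than 1, so it decays geometrically with sequence length.
mags = gradient_through_time(w_hh=0.5, steps=50)
```

Under these assumptions each step scales the gradient by at most 0.5, so after 50 steps almost no learning signal is left for the earliest inputs. Gated architectures are designed to provide a path along which this signal decays more slowly.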

In recent years, RNNs have seen a resurgence in popularity thanks to advances in deep learning and the availability of large-scale datasets. Researchers have been able to push the boundaries of what RNNs can achieve, using them for tasks such as machine translation, sentiment analysis, and even generating realistic text and images.

One of the key developments in the evolution of RNNs has been the integration of attention mechanisms, which allow the model to focus on different parts of the input sequence at each prediction step. This has led to significant improvements in tasks such as machine translation, where the model needs access to the entire input sequence, rather than a single compressed summary of it, to generate accurate translations.
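
As a rough illustration of how attention weighs the input sequence, the sketch below scores each encoder state against a query vector and returns a weighted average. It assumes dot-product scoring for simplicity, which is only one of several scoring functions used with RNNs. The names `softmax` and `attend` and the example vectors are illustrative.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, encoder_states):
    """Dot-product attention: weight each encoder state by its match to the query."""
    scores = [sum(q * k for q, k in zip(query, state)) for state in encoder_states]
    weights = softmax(scores)
    dim = len(query)
    # The context vector is the attention-weighted average of the encoder states.
    context = [
        sum(w * state[i] for w, state in zip(weights, encoder_states))
        for i in range(dim)
    ]
    return weights, context


# Three hypothetical encoder states (one per input token) and one decoder query.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, context = attend([2.0, 0.0], encoder_states)
```

Here the first and third states align with the query more strongly than the second, so they receive larger weights. In a translation model the query would come from the decoder's hidden state, and a new set of weights would be computed for every output token.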

RNNs have also benefited from techniques such as transfer learning and meta-learning, which let a model reuse knowledge from one task to improve performance on another. In practice, this means an RNN pretrained on a large dataset can be fine-tuned on a much smaller one and still perform well across a range of applications.

Looking ahead, research on RNNs continues to focus on improving their ability to handle long-range dependencies, capture context in sequential data, and scale to even larger datasets. With the continued advancement of deep learning techniques and the increasing availability of computational resources, RNNs are likely to remain an important tool for sequence modeling for years to come.
