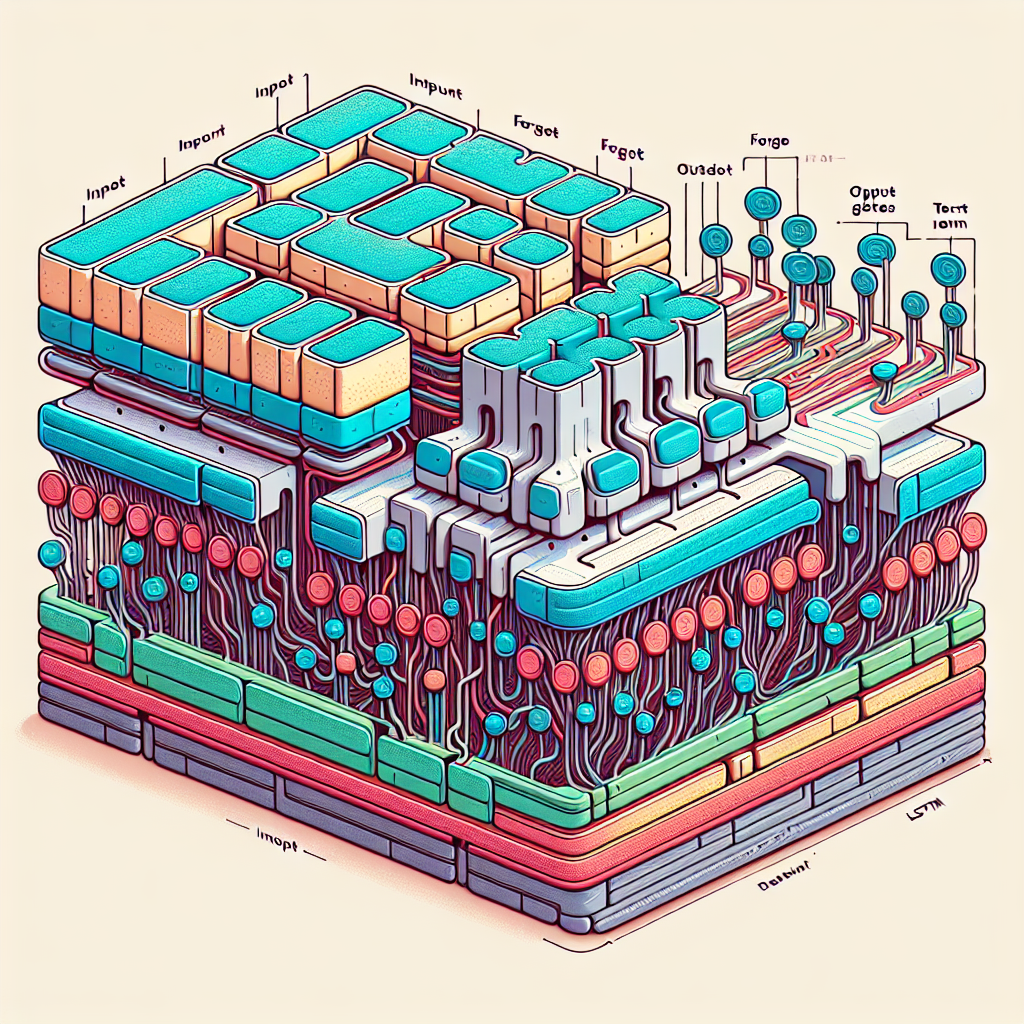

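Recurrent Neural Networks (RNNs) have had a major impact on machine learning because they carry a hidden state from one time step to the next. This lets a model remember past information and use it to make predictions. One of the most popular types of RNN is the Long Short-Term Memory (LSTM) network. It was designed to address the vanishing gradient problem: in traditional RNNs, error signals shrink as they are propagated back through many time steps, which makes long-range dependencies hard to learn.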
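
To see why plain RNNs struggle, here is a minimal sketch using a hypothetical single-unit RNN with the update `h_t = tanh(w*h_prev + u*x_t)`. By the chain rule, the gradient of the last hidden state with respect to the first is a product of per-step factors `w*(1 - h_t**2)`. When `|w| < 1`, each factor is smaller than 1 in magnitude, so the product shrinks exponentially with sequence length. The function name and the weight values are illustrative assumptions and are not taken from any library.

```python
import math


def rnn_gradient_through_time(w: float, u: float, inputs: list[float], h0: float = 0.0) -> float:
    """Return dh_T/dh_0 for a hypothetical scalar RNN h_t = tanh(w*h_{t-1} + u*x_t)."""
    h = h0
    grad = 1.0
    for x in inputs:
        h = math.tanh(w * h + u * x)
        # Chain rule: dh_t/dh_{t-1} = w * tanh'(.) = w * (1 - h_t^2)
        grad *= w * (1.0 - h * h)
    return grad


grad_5 = rnn_gradient_through_time(0.5, 1.0, [1.0] * 5)
grad_50 = rnn_gradient_through_time(0.5, 1.0, [1.0] * 50)
print(f"5 steps: {grad_5:.3e}   50 steps: {grad_50:.3e}")
```

An LSTM eases this by updating its cell state additively. The direct path from one cell state to the next is scaled by forget-gate values, and the network can learn to keep those close to 1.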

LSTM networks are composed of memory cells that store and update information over time in an internal cell state. Each cell has three gates: an input gate, a forget gate, and an output gate. These gates control the flow of information into and out of the cell, allowing the network to selectively remember or forget information as needed.
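
For reference, one widely used formulation of a single LSTM step (without peephole connections) is written below. The notation is an assumption and is not defined elsewhere in this article. $x_t$ is the current input, $h_{t-1}$ the previous hidden state, and $c_{t-1}$ the previous cell state. $\sigma$ is the logistic sigmoid, $\odot$ is elementwise multiplication, and $W$, $U$, $b$ are learned weights and biases.

$$
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) && \text{forget gate} \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) && \text{input gate} \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) && \text{candidate values} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{cell state update} \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) && \text{output gate} \\
h_t &= o_t \odot \tanh(c_t) && \text{hidden state (output)}
\end{aligned}
$$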

The input gate determines how much new information is written to the cell state. That new information is a set of candidate values, computed from the current input and the previous hidden state by a tanh layer. The input gate is a sigmoid layer, so its outputs lie between 0 and 1, and it scales each candidate value elementwise. A gate value of 1 means the corresponding candidate value is stored in full, while a value of 0 means none of it is stored.
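
A minimal sketch of the input gate for a hypothetical single-unit cell follows, assuming scalar weights. `w_i`, `u_i`, `b_i` belong to the sigmoid gate and `w_c`, `u_c`, `b_c` to the tanh candidate layer; these names are illustrative. The function returns the amount actually written into the cell state, the gate value times the candidate value.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function; output lies in (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def input_write(x: float, h_prev: float,
                w_i: float, u_i: float, b_i: float,
                w_c: float, u_c: float, b_c: float) -> float:
    """Amount written into the cell state at this step: i_t * c~_t (hypothetical scalar cell)."""
    i_t = sigmoid(w_i * x + u_i * h_prev + b_i)        # input gate, between 0 and 1
    c_tilde = math.tanh(w_c * x + u_c * h_prev + b_c)  # candidate value, between -1 and 1
    return i_t * c_tilde


# Large positive gate bias: gate near 1, almost the full candidate is written.
print(input_write(1.0, 0.0, 0.0, 0.0, 10.0, 1.0, 0.0, 0.0))
# Large negative gate bias: gate near 0, almost nothing is written.
print(input_write(1.0, 0.0, 0.0, 0.0, -10.0, 1.0, 0.0, 0.0))
```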

The forget gate controls how much of the previous cell state is retained. Like the input gate, it uses a sigmoid activation function. A value of 1 means the corresponding part of the previous cell state is kept in full, while a value of 0 means it is discarded. The new cell state is then the retained part of the old state plus the new information admitted by the input gate.
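
The sketch below uses a hypothetical single-unit cell with illustrative scalar weights. It applies the update `c_t = f_t * c_prev + i_t * c_tilde`. It also shows that when nothing new is written, the forget gate alone decides how long a stored value survives.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function; output lies in (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def forget_gate(x: float, h_prev: float, w_f: float, u_f: float, b_f: float) -> float:
    """f_t = sigmoid(w_f*x + u_f*h_prev + b_f) for a single hypothetical unit."""
    return sigmoid(w_f * x + u_f * h_prev + b_f)


def update_cell(c_prev: float, f_t: float, write: float) -> float:
    """c_t = f_t * c_{t-1} + write, where write = i_t * c~_t from the input gate."""
    return f_t * c_prev + write


def retained_after(steps: int, b_f: float, c0: float = 1.0) -> float:
    """Carry c0 for `steps` steps with zero input and nothing new written."""
    c = c0
    for _ in range(steps):
        f_t = forget_gate(0.0, 0.0, 0.0, 0.0, b_f)  # only the bias drives the gate here
        c = update_cell(c, f_t, 0.0)
    return c


print(retained_after(100, b_f=10.0))  # forget gate near 1: the value is mostly kept
print(retained_after(100, b_f=0.0))   # forget gate = 0.5: the value halves every step
```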

Finally, the output gate determines how much of the cell state is exposed as output, that is, as the hidden state passed to the next time step and to any following layer. The output gate is a sigmoid layer, like the other two gates. The cell state is first passed through a tanh activation function, which squashes its values into the range -1 to 1. The result is then multiplied elementwise by the output gate's values, so only the selected parts of the cell state are passed on.
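
A minimal sketch of the output step for a hypothetical single-unit cell, assuming illustrative scalar weights `w_o`, `u_o`, `b_o`. The hidden state is the sigmoid gate value times the tanh of the cell state.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function; output lies in (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def hidden_state(c_t: float, x: float, h_prev: float,
                 w_o: float, u_o: float, b_o: float) -> float:
    """h_t = o_t * tanh(c_t) for a single hypothetical unit."""
    o_t = sigmoid(w_o * x + u_o * h_prev + b_o)  # output gate, between 0 and 1
    return o_t * math.tanh(c_t)                  # tanh squashes c_t into the range -1 to 1


for c in (-50.0, -1.0, 0.0, 1.0, 50.0):
    print(c, hidden_state(c, x=0.0, h_prev=0.0, w_o=0.0, u_o=0.0, b_o=0.0))
```

Even a cell state far outside the range -1 to 1 yields a bounded hidden state. A strongly negative gate bias suppresses the output, while the cell state itself is still carried to the next step unchanged.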

By using these gates, LSTM networks are able to learn long-term dependencies in data, making them well-suited for tasks such as language modeling, speech recognition, and machine translation. Some LSTM variants also add peephole connections, which let the gates read the cell state directly instead of seeing it only through the previous hidden state.
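
A minimal sketch of one step of a single-unit LSTM with peephole connections follows. It uses the commonly described arrangement: the input and forget gates see the previous cell state, and the output gate sees the updated cell state. The dictionary layout, the function name, and all weight values are hypothetical. Setting every peephole weight to zero recovers the standard cell.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function; output lies in (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def peephole_lstm_step(x: float, h_prev: float, c_prev: float,
                       w: dict[str, float], u: dict[str, float],
                       b: dict[str, float], p: dict[str, float]) -> tuple[float, float]:
    """One step of a hypothetical single-unit peephole LSTM; returns (h_t, c_t).

    w, u, b are keyed by "f", "i", "o", "c"; p (peephole weights) by "f", "i", "o".
    """
    # Input and forget gates peek at the previous cell state c_{t-1}.
    f_t = sigmoid(w["f"] * x + u["f"] * h_prev + p["f"] * c_prev + b["f"])
    i_t = sigmoid(w["i"] * x + u["i"] * h_prev + p["i"] * c_prev + b["i"])
    c_tilde = math.tanh(w["c"] * x + u["c"] * h_prev + b["c"])
    c_t = f_t * c_prev + i_t * c_tilde
    # The output gate peeks at the updated cell state c_t.
    o_t = sigmoid(w["o"] * x + u["o"] * h_prev + p["o"] * c_t + b["o"])
    h_t = o_t * math.tanh(c_t)
    return h_t, c_t


zeros = {"f": 0.0, "i": 0.0, "o": 0.0, "c": 0.0}
no_peep = {"f": 0.0, "i": 0.0, "o": 0.0}
strong_forget_peep = {"f": 10.0, "i": 0.0, "o": 0.0}

# With zero input and zero weights, the forget gate is 0.5 and half of c_prev survives.
print(peephole_lstm_step(0.0, 0.0, 1.0, zeros, zeros, zeros, no_peep))
# A large forget-gate peephole weight lets a positive c_prev hold the forget gate open.
print(peephole_lstm_step(0.0, 0.0, 1.0, zeros, zeros, zeros, strong_forget_peep))
```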

Overall, LSTM networks are a powerful tool for handling sequential data and learning long-term dependencies. By understanding the inner workings of these networks, researchers and practitioners can better utilize them for a wide range of applications in machine learning and artificial intelligence.
