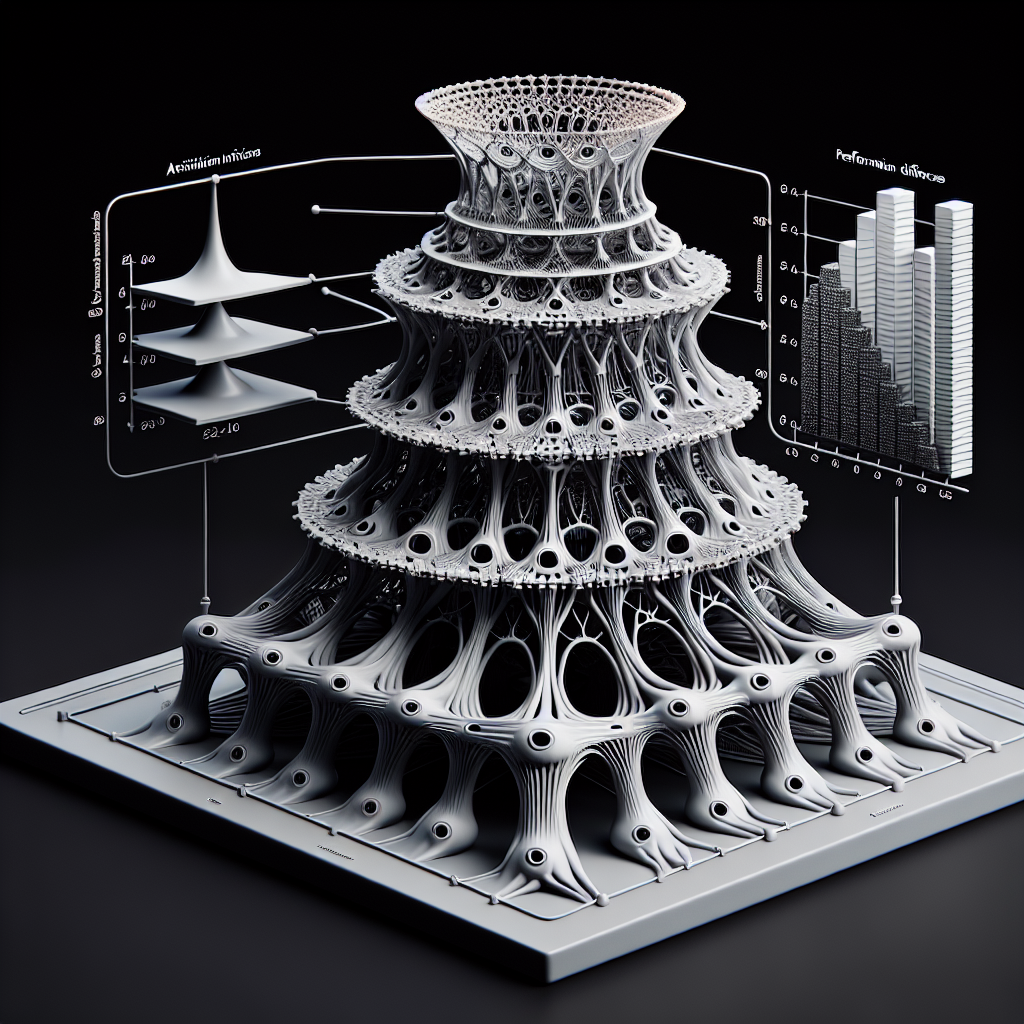

Recurrent Neural Networks (RNNs) have gained popularity in recent years for their ability to model sequential data and make predictions based on past information. A key component that strongly affects their performance is the activation function: the mathematical function that determines a node's output from the input it receives, typically a weighted sum of the node's inputs plus a bias.
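
As a minimal illustration of a single node, assuming the usual weighted-sum-plus-bias input, the sketch below applies an activation function to the pre-activation value. The function name `node_output` and the weights are hypothetical, chosen only to make the computation concrete.

```python
import math


def node_output(inputs, weights, bias, activation=math.tanh):
    """Weighted sum of the inputs plus a bias, passed through an activation function."""
    pre_activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(pre_activation)


# Hypothetical inputs and weights, chosen only for illustration.
inputs = [1.0, 2.0]
weights = [0.5, 0.25]
print(node_output(inputs, weights, bias=0.0))                          # tanh squashes the sum into (-1, 1)
print(node_output(inputs, weights, bias=0.0, activation=lambda z: z))  # identity: the raw sum, 1.0
```

Swapping the `activation` argument is all it takes to compare functions on the same node, which is the idea behind the comparison in the rest of this article.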

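In RNNs, activation functions introduce non-linearity into the network, allowing it to learn complex patterns in sequential data; without them, the hidden state would be only a linear (affine) function of the input sequence. The activation functions most commonly discussed for RNNs are sigmoid, tanh, and ReLU. The LSTM (Long Short-Term Memory) cell is often compared alongside them, although it is a gated recurrent architecture built from sigmoid and tanh functions rather than an activation function in its own right.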
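
To make the role of the activation concrete, here is a minimal sketch of a vanilla (Elman-style) RNN in which the activation is a pluggable argument, assuming the common update h_t = f(W_x x_t + W_h h_{t-1} + b). The weights and the helper names `rnn_step` and `run_rnn` are hypothetical, and only sigmoid, tanh, and ReLU are plugged in, because an LSTM is a gated cell rather than a scalar function.

```python
import math


def sigmoid(x):
    # Numerically stable logistic function, output in (0, 1).
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def relu(x):
    # Rectified linear unit, output in [0, inf).
    return max(0.0, x)


def rnn_step(x_t, h_prev, W_x, W_h, b, activation):
    """One step of a vanilla RNN: h_t = activation(W_x x_t + W_h h_prev + b).

    W_x has shape hidden_size x input_size and W_h has shape hidden_size x hidden_size,
    both stored as lists of rows.
    """
    h_t = []
    for i in range(len(b)):
        pre = b[i]
        pre += sum(W_x[i][j] * x_t[j] for j in range(len(x_t)))
        pre += sum(W_h[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_t.append(activation(pre))  # the non-linearity is applied element-wise
    return h_t


def run_rnn(sequence, W_x, W_h, b, activation):
    """Feed a whole sequence through the cell, carrying the hidden state forward."""
    h = [0.0] * len(b)
    for x_t in sequence:
        h = rnn_step(x_t, h, W_x, W_h, b, activation)
    return h


# Hypothetical toy weights: 1-dimensional inputs, 2 hidden units.
W_x = [[0.5], [-0.3]]
W_h = [[0.1, 0.2], [0.0, 0.4]]
b = [0.0, 0.1]
sequence = [[1.0], [0.5], [-1.0]]

for name, fn in [("sigmoid", sigmoid), ("tanh", math.tanh), ("relu", relu)]:
    print(name, run_rnn(sequence, W_x, W_h, b, fn))
```

Keeping the weights fixed and changing only `activation` isolates the effect of the non-linearity: the sigmoid states stay in (0, 1), the tanh states in (-1, 1), and the ReLU states are non-negative but unbounded.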

To understand the role of activation functions in RNNs, we can run a comparative analysis that evaluates their performance on a specific task. Here we consider text generation: the RNN is trained on a large corpus of text to predict the next character (or word) from the ones before it, and is then used to generate new text.
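
The article does not specify how the corpus is tokenized, so the sketch below assumes character-level next-character prediction, a common setup for this task. It covers only data preparation: each training target is the input window shifted one character to the right. The names `build_char_dataset` and `seq_len` are hypothetical.

```python
def build_char_dataset(corpus, seq_len):
    """Turn raw text into (input, target) index sequences for next-character prediction.

    The target sequence is the input shifted one character to the right, so at every
    time step the RNN is trained to predict the character that comes next.
    """
    vocab = sorted(set(corpus))
    char_to_idx = {ch: i for i, ch in enumerate(vocab)}
    encoded = [char_to_idx[ch] for ch in corpus]
    pairs = []
    for start in range(len(encoded) - seq_len):
        inputs = encoded[start:start + seq_len]
        targets = encoded[start + 1:start + seq_len + 1]
        pairs.append((inputs, targets))
    return vocab, char_to_idx, pairs


vocab, char_to_idx, pairs = build_char_dataset("hello world", seq_len=4)
print(vocab)     # [' ', 'd', 'e', 'h', 'l', 'o', 'r', 'w']
print(pairs[0])  # ([3, 2, 4, 4], [2, 4, 4, 5])  i.e. "hell" -> "ello"
```

The same pairs can then be fed to RNNs that differ only in their activation function, so that any difference in loss or sample quality can be attributed to that choice.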

First, let's consider the sigmoid activation function, σ(x) = 1 / (1 + e^(-x)), which was widely used in early RNNs because it is smooth and squashes values into the range (0, 1). However, its derivative, σ'(x) = σ(x)(1 - σ(x)), is at most 0.25 (at x = 0) and approaches zero as inputs move away from the origin, where the function saturates. Because backpropagation through time multiplies one such factor per time step, gradients shrink rapidly over long sequences: the vanishing gradient problem. This leads to slow convergence during training and makes it difficult to capture long-term dependencies in the data.
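
The numbers behind the vanishing-gradient argument can be checked directly. This sketch evaluates σ'(x) at a few points and then, under a deliberately simplified assumption (a scalar RNN with recurrent weight 1 and every pre-activation at 0, where σ' is largest), multiplies one factor per time step. The helper `best_case_gradient` is hypothetical.

```python
import math


def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def sigmoid_grad(x):
    # d/dx sigmoid(x) = sigmoid(x) * (1 - sigmoid(x)); its maximum, 0.25, is at x = 0.
    s = sigmoid(x)
    return s * (1.0 - s)


# The local gradient saturates as the input moves away from the origin.
for x in [0.0, 2.0, 5.0, 10.0]:
    print(f"x = {x:4.1f}   sigmoid'(x) = {sigmoid_grad(x):.6f}")


def best_case_gradient(steps, recurrent_weight=1.0):
    # Simplified scalar RNN: backpropagation through time multiplies one factor
    # recurrent_weight * sigmoid'(pre_t) per step; every pre_t is assumed to be 0.
    return (recurrent_weight * sigmoid_grad(0.0)) ** steps


for steps in [1, 5, 10, 20]:
    print(steps, best_case_gradient(steps))
```

Even in this favourable case the gradient shrinks by a factor of 4 per step, so after 20 steps it is 0.25 ** 20, roughly 1e-12; real pre-activations away from 0 make it smaller still.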

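Next, let's look at the tanh activation function, which has the same S-shape as sigmoid but squashes values into the range (-1, 1); in fact, tanh(x) = 2σ(2x) - 1. Its derivative, 1 - tanh(x)^2, peaks at 1 rather than 0.25, and its outputs are zero-centered. Tanh still saturates for large inputs, so it also suffers from vanishing gradients, but to a lesser extent than sigmoid. This makes tanh a better choice than sigmoid for the hidden state of a plain RNN, and it is the usual default there: its stronger gradients can help the network learn patterns that span more time steps.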
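
A short sketch comparing the two derivatives side by side, and numerically checking the identity tanh(x) = 2σ(2x) - 1. The input values are arbitrary; nothing here is specific to a particular framework.

```python
import math


def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)          # peaks at 0.25 when x = 0


def tanh_grad(x):
    t = math.tanh(x)
    return 1.0 - t * t            # peaks at 1.0 when x = 0


# tanh is a rescaled, zero-centered sigmoid: tanh(x) = 2 * sigmoid(2x) - 1.
max_gap = max(abs(math.tanh(x) - (2.0 * sigmoid(2.0 * x) - 1.0))
              for x in [-2.0, 0.0, 0.5, 3.0])
print("identity error:", max_gap)

for x in [0.0, 0.5, 1.0, 2.0, 4.0]:
    print(f"x = {x:3.1f}   sigmoid'(x) = {sigmoid_grad(x):.4f}   tanh'(x) = {tanh_grad(x):.4f}")
```

Near the origin tanh' is up to four times larger than sigmoid', which is where its advantage comes from. For large |x| both derivatives approach zero (tanh's slightly faster), which is why tanh reduces, but does not remove, the vanishing gradient problem.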

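Moving on, the ReLU activation function, ReLU(x) = max(0, x), has gained popularity in recent years for its simplicity and effectiveness in training deep neural networks. Its derivative is exactly 1 for positive inputs, so it does not saturate on that side; this mitigates the vanishing gradient problem and tends to speed up convergence. In an RNN, however, ReLU's output is unbounded: if the recurrent weights amplify the hidden state, activations and gradients can grow exponentially across time steps (exploding gradients) and cause the network to diverge during training. Units whose inputs stay negative have the opposite problem: they output zero and pass no gradient at all.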
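
Both failure modes show up in a toy scalar RNN, h_t = f(w * h_{t-1} + x_t). The sketch assumes a hypothetical setup (one input pulse followed by zeros, recurrent weight w = 1.5) and tracks dh_T/dh_0, the product of w * f'(pre_t) that backpropagation through time accumulates. `run_scalar_rnn` is a hypothetical helper.

```python
import math


def relu(x):
    return max(0.0, x)


def relu_grad(x):
    # 1 for positive inputs, 0 otherwise (the value at exactly 0 is a convention).
    return 1.0 if x > 0 else 0.0


def tanh_grad(x):
    t = math.tanh(x)
    return 1.0 - t * t


def run_scalar_rnn(w, inputs, activation, activation_grad):
    """Scalar RNN h_t = activation(w * h_{t-1} + x_t), starting from h_0 = 0.

    Returns the final state h_T and dh_T/dh_0, the product over time steps of
    w * activation'(pre_t) that backpropagation through time accumulates.
    """
    h, grad = 0.0, 1.0
    for x in inputs:
        pre = w * h + x
        h = activation(pre)
        grad *= w * activation_grad(pre)
    return h, grad


# Hypothetical setup: one input pulse followed by 19 zero inputs,
# with a recurrent weight greater than 1.
inputs = [1.0] + [0.0] * 19
w = 1.5

print("relu:", run_scalar_rnn(w, inputs, relu, relu_grad))      # state and gradient grow like 1.5 ** t
print("tanh:", run_scalar_rnn(w, inputs, math.tanh, tanh_grad))  # state stays in (-1, 1)

# A unit whose pre-activation stays negative outputs 0 and passes no gradient.
print("dead relu:", run_scalar_rnn(0.5, [-1.0] * 5, relu, relu_grad))  # (0.0, 0.0)
```

With ReLU, both the state and the gradient reach about 1.5 ** 20 after 20 steps. With tanh and the same weight, the state stays bounded, but the accumulated gradient shrinks toward zero instead, which illustrates the trade-off between the two functions.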

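Lastly, let's consider LSTM (Long Short-Term Memory). Strictly speaking, LSTM is not an activation function but a recurrent cell architecture designed specifically to capture long-term dependencies in sequential data. An LSTM cell combines sigmoid gates (input, forget, and output) with tanh transformations to control what information is written to, kept in, and read from an internal cell state. Because that cell state is updated additively rather than being repeatedly squashed through an activation function, gradients can flow across many time steps. This allows LSTMs to model complex sequences effectively and often to outperform plain RNNs, whichever activation function those use, on tasks requiring long-term memory.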
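
The gating mechanism can be sketched as a single-unit LSTM step, assuming the widely used formulation with a forget gate (no peephole connections). The parameter values, including the positive forget-gate bias, are hypothetical and chosen only to show the cell state holding a signal across steps; `lstm_step` and `params` are illustrative names.

```python
import math


def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM cell.

    p maps each gate name to a (w_x, w_h, b) triple:
      'f' forget gate, 'i' input gate, 'o' output gate, 'g' candidate cell value.
    """
    def pre(gate):
        w_x, w_h, b = p[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("f"))      # fraction of the old cell state to keep, in (0, 1)
    i = sigmoid(pre("i"))      # fraction of the new candidate to write, in (0, 1)
    o = sigmoid(pre("o"))      # fraction of the cell state to expose, in (0, 1)
    g = math.tanh(pre("g"))    # candidate value, in (-1, 1)
    c = f * c_prev + i * g     # additive cell-state update
    h = o * math.tanh(c)       # hidden state passed to the next step and the output layer
    return h, c


# Hypothetical parameters: a forget-gate bias of 3.0 keeps f close to 1.
params = {
    "f": (0.0, 0.0, 3.0),
    "i": (2.0, 0.0, -1.0),
    "o": (0.0, 0.0, 1.0),
    "g": (1.0, 0.0, 0.0),
}
h, c = 0.0, 0.0
sequence = [1.0] + [0.0] * 9   # one signal at the first step, then zeros
for x in sequence:
    h, c = lstm_step(x, h, c, params)
    print(f"h = {h:.4f}   c = {c:.4f}")
```

After the first step the candidate is tanh(0) = 0, so the cell state is simply multiplied by f (about 0.95) at each step and still holds most of the original signal after nine more steps. That additive, gated path is what a plain activation function cannot provide.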

In conclusion, the choice of activation function plays a critical role in the performance of RNNs. Each option has its advantages and disadvantages, and a comparative analysis can help determine the best choice for a specific task. In the case of text generation, the LSTM architecture, which builds on sigmoid and tanh, stands out as the most effective option because of its ability to capture long-term dependencies in sequential data. By understanding how activation functions shape gradient flow in RNNs, researchers and practitioners can optimize their networks for better performance on a wide range of tasks.
