Recurrent Neural Networks (RNNs) have been widely used in various applications such as speech recognition, language modeling, and machine translation. One of the key challenges in training RNNs is handling long-term dependencies, where the network needs to remember information from earlier time steps to make accurate predictions.

Gated architectures, such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU), have been introduced to address this issue by incorporating gating mechanisms that control the flow of information in the network. These architectures have shown significant improvements in capturing long-term dependencies compared to traditional RNNs.

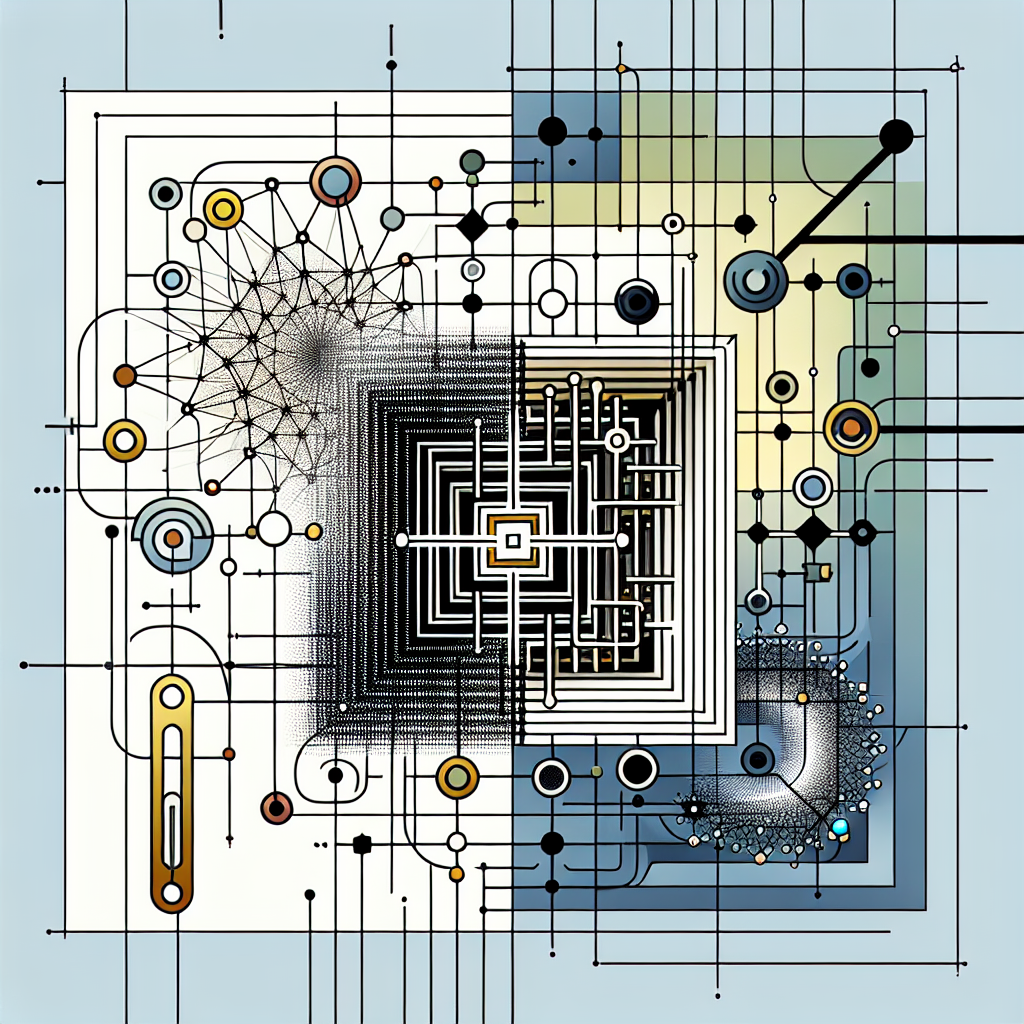

Gated architectures improve the handling of long-term dependencies by selectively updating and forgetting information at each time step. An LSTM, for example, maintains a cell state alongside its hidden state and uses three gates (input, forget, and output) to regulate the flow of information. The input gate controls how much new candidate information is added to the cell state, the forget gate determines how much of the previous cell state is kept or discarded, and the output gate decides how much of the cell state is exposed as the hidden state, which is what the next time step and any downstream layers receive.
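To make the three gates concrete, here is a minimal sketch of a single LSTM time step using the standard LSTM equations. It uses plain Python lists rather than a tensor library so that it runs without dependencies. The names `lstm_step`, `affine`, and the `params` dictionary layout are illustrative assumptions, not taken from any particular framework.

```python
import math


def sigmoid(z):
    # Squashes any real number into (0, 1); this is the gate activation.
    return 1.0 / (1.0 + math.exp(-z))


def affine(x, h, W, U, b):
    # Computes W @ x + U @ h + b, one output per row of W and U.
    return [
        sum(w * xi for w, xi in zip(w_row, x))
        + sum(u * hi for u, hi in zip(u_row, h))
        + b_k
        for w_row, u_row, b_k in zip(W, U, b)
    ]


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step.

    params maps each of "input", "forget", "output", "candidate"
    to a (W, U, b) triple (hypothetical layout chosen for readability).
    """
    i = [sigmoid(z) for z in affine(x, h_prev, *params["input"])]
    f = [sigmoid(z) for z in affine(x, h_prev, *params["forget"])]
    o = [sigmoid(z) for z in affine(x, h_prev, *params["output"])]
    g = [math.tanh(z) for z in affine(x, h_prev, *params["candidate"])]

    # Forget gate scales the old cell state; input gate scales the new candidate.
    c = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c_prev, i, g)]
    # Output gate decides how much of the cell state becomes the hidden state.
    h = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c)]
    return h, c, {"input": i, "forget": f, "output": o}
```

If the forget gate is close to 1 and the input gate is close to 0, the cell state passes through a step almost unchanged, which is the mechanism that lets information survive across many time steps.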

By effectively managing the flow of information, gated architectures are able to retain relevant information over longer sequences, making them better suited for tasks that require capturing dependencies over extended periods of time. This is particularly important in applications such as speech recognition, where the context of a spoken sentence can span several seconds.

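Furthermore, gated architectures mitigate the vanishing gradient problem that often plagues traditional RNNs. In an LSTM, the cell state is updated additively, so the gradient along that path is scaled by the forget gate at each step rather than repeatedly squashed by a weight matrix and a saturating nonlinearity. When the forget gate stays close to 1, gradients can flow across many time steps, enabling more efficient learning of long-term dependencies. Gating does not by itself rule out exploding gradients, which are usually handled separately with techniques such as gradient clipping.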
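The effect of gating on gradient flow can be illustrated with a small scalar sketch. It compares the gradient through a one-unit vanilla RNN, `h_t = tanh(w * h_{t-1})`, with the gradient along the direct cell-state path of an LSTM, `c_t = f_t * c_{t-1} + i_t * g_t`. This is a simplification: the gates are treated as constants and their own dependence on earlier states is ignored. The function names and numeric values are chosen purely for illustration.

```python
import math


def vanilla_rnn_gradient(w, h0, steps):
    """d h_T / d h_0 for the scalar recurrence h_t = tanh(w * h_{t-1})."""
    h, grad = h0, 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        # Chain rule: each step multiplies by w * tanh'(w * h_{t-1}) = w * (1 - h_t^2).
        grad *= w * (1.0 - h * h)
    return grad


def cell_state_gradient(forget_gates):
    """d c_T / d c_0 along the direct cell-state path, gates held constant."""
    grad = 1.0
    for f in forget_gates:
        # The additive update contributes only the forget-gate factor.
        grad *= f
    return grad


steps = 100
rnn_grad = vanilla_rnn_gradient(w=0.9, h0=0.5, steps=steps)
lstm_grad = cell_state_gradient([0.99] * steps)
print(f"vanilla RNN gradient after {steps} steps: {rnn_grad:.3e}")
print(f"LSTM cell-state gradient after {steps} steps: {lstm_grad:.3e}")
```

With these assumed values, the vanilla RNN gradient is bounded above by 0.9^100 (roughly 2.7e-5), while the cell-state path retains 0.99^100 (roughly 0.37) of the signal. In a trained LSTM the forget gate values are learned and vary per step and per unit, so the actual behavior depends on the data.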

In conclusion, gated architectures such as LSTM and GRU improve the ability of recurrent neural networks to model long-term dependencies. Their gating mechanisms regulate what is written to, kept in, and read from the network's memory, which lets relevant information persist over extended sequences and keeps gradients usable during training. This makes them well-suited for tasks that require modeling complex relationships over time, such as speech recognition, language modeling, and machine translation.
