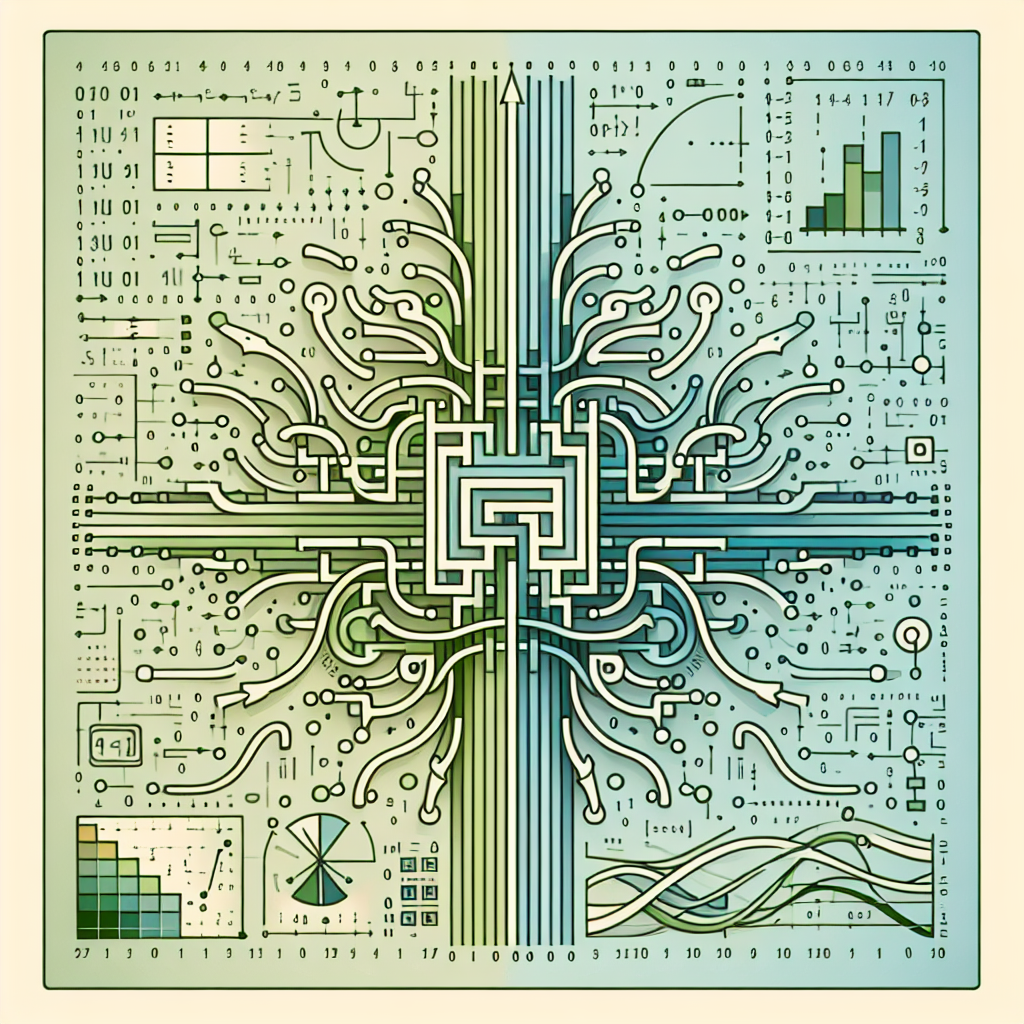

The Role of Gated Recurrent Units (GRUs) in RNNs

Recurrent Neural Networks (RNNs) have been widely used in natural language processing, speech recognition, and time series prediction. Their defining feature is a hidden state that is updated at every time step and carries information forward through the sequence. However, standard RNNs suffer from the vanishing gradient problem. During backpropagation through time, the gradient is multiplied by the Jacobian of the recurrent step once per time step, and when those factors are smaller than one the gradient shrinks exponentially with distance. This makes it difficult for the network to learn long-range dependencies.
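A toy scalar RNN makes this shrinkage concrete. The sketch below assumes a single hidden unit with no input, h_t = tanh(w * h_{t-1}), and tracks d h_T / d h_0 with the chain rule. The weight 0.9 and starting state 0.5 are arbitrary illustrative choices, not values from any real model.

```python
import math


def vanilla_rnn_gradient(w: float, h0: float, steps: int) -> float:
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1})."""
    h = h0
    grad = 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - tanh^2(.)) = w * (1 - h_t^2)
        grad *= w * (1.0 - h * h)
    return grad


# The gradient reaching the first state shrinks as the sequence gets longer.
for steps in (1, 10, 50):
    print(f"T={steps:3d}  dh_T/dh_0 = {vanilla_rnn_gradient(0.9, 0.5, steps):.2e}")
```

Each local factor here is at most 0.9, so after 50 steps the gradient is below 0.9^50, roughly 0.005. A signal from the start of the sequence barely influences the weight update.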

To address this issue, Cho et al. introduced the Gated Recurrent Unit (GRU) in 2014. A GRU is a recurrent architecture designed to capture long-range dependencies in sequences more reliably than a standard RNN, and it can be viewed as a simpler alternative to the Long Short-Term Memory (LSTM) unit.

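The key idea behind GRUs is to use gating mechanisms to control how information flows through the network. A GRU has two gates: an update gate and a reset gate. The update gate controls how much of the previous hidden state is carried forward unchanged and how much is replaced by a newly computed candidate state. The reset gate controls how much of the previous hidden state is used when computing that candidate. When the reset gate is close to zero, the unit can largely ignore its past and build the candidate from the current input.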
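For reference, one standard way to write a single GRU step is shown below. It follows the formulation of Cho et al. (2014), with bias terms included. Here x_t is the input at step t, h_{t-1} is the previous hidden state, σ is the logistic sigmoid, ⊙ is elementwise multiplication, and W, U, and b are the learned input weights, recurrent weights, and biases.

```latex
\begin{aligned}
z_t &= \sigma\left(W_z x_t + U_z h_{t-1} + b_z\right) && \text{(update gate)} \\
r_t &= \sigma\left(W_r x_t + U_r h_{t-1} + b_r\right) && \text{(reset gate)} \\
\tilde{h}_t &= \tanh\left(W_h x_t + U_h \left(r_t \odot h_{t-1}\right) + b_h\right) && \text{(candidate state)} \\
h_t &= z_t \odot h_{t-1} + \left(1 - z_t\right) \odot \tilde{h}_t && \text{(new hidden state)}
\end{aligned}
```

Conventions differ between sources. Some papers swap z_t and 1 − z_t in the last line. Some implementations, such as PyTorch's `nn.GRU`, apply the reset gate after the recurrent matrix multiplication instead of before it. Check which form a given library uses before comparing results.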

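These gates let a GRU capture long-range dependencies much more reliably than a standard RNN. When the update gate keeps most of the previous state, the hidden state is copied forward almost unchanged. Gradients flowing back along that copy path are not repeatedly squashed, which greatly mitigates the vanishing gradient problem, although it does not eliminate it entirely. This makes GRUs well suited to tasks that involve long-term dependencies, such as machine translation, speech recognition, and sentiment analysis.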
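The effect of the copy path can be checked on a toy scalar example. The sketch below compares d h_T / d h_0 for a plain tanh RNN and for a one-unit GRU. It uses the update h_t = z * h_{t-1} + (1 - z) * candidate, in which z weights the old state. As a deliberate simplification, the input is zero and both gates depend only on a bias, so they stay constant. The bias values (`b_z = 4.0`, `b_r = 0.0`) and weights are hypothetical and chosen only for illustration.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-x))


def vanilla_gradient(steps: int, h0: float = 0.5, w: float = 0.9) -> float:
    """d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1})."""
    h, grad = h0, 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        grad *= w * (1.0 - h * h)  # local derivative of the tanh step
    return grad


def gru_gradient(steps: int, h0: float = 0.5, w_h: float = 0.9,
                 b_z: float = 4.0, b_r: float = 0.0) -> float:
    """d h_T / d h_0 for a scalar GRU whose gates are constant (bias only).

    Update rule: h_t = z * h_{t-1} + (1 - z) * tanh(w_h * r * h_{t-1}).
    """
    z = sigmoid(b_z)  # update gate, about 0.98: most of the old state is kept
    r = sigmoid(b_r)  # reset gate, 0.5
    h, grad = h0, 1.0
    for _ in range(steps):
        n = math.tanh(w_h * r * h)  # candidate state computed from the old h
        # Local derivative = direct copy path (z) + path through the candidate
        grad *= z + (1.0 - z) * (1.0 - n * n) * w_h * r
        h = z * h + (1.0 - z) * n
    return grad


for steps in (10, 50):
    print(f"T={steps:3d}  vanilla={vanilla_gradient(steps):.2e}  "
          f"gru={gru_gradient(steps):.2e}")
```

Because each GRU factor is at least z ≈ 0.98, the gradient after 50 steps stays above 0.98^50, roughly 0.4, while the vanilla RNN's gradient falls below 0.01. In a trained network the gates depend on x_t and h_{t-1}, so the Jacobian gains extra terms. The additive z ⊙ h_{t-1} path is still what keeps the product from collapsing while the update gate stays near 1.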

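GRUs are also cheaper than LSTMs. A GRU has two gates and no separate memory cell, while an LSTM has three gates plus a memory cell. For the same input and hidden sizes, a GRU layer therefore has about three-quarters as many parameters and does roughly three-quarters of the matrix work per time step. This efficiency has made GRUs a popular choice among researchers and practitioners working with RNNs.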
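The parameter saving is simple arithmetic. The sketch below counts the weights in a single layer. It assumes the common formulation in which each gate or candidate block has its own input-to-hidden matrix, hidden-to-hidden matrix, and bias. The helper names and the sizes 128 and 256 are hypothetical. Some frameworks, including PyTorch's `nn.GRU` and `nn.LSTM`, keep two bias vectors per block (input-side and hidden-side), and `biases_per_block=2` models that case.

```python
def gated_layer_params(num_blocks: int, input_size: int, hidden_size: int,
                       biases_per_block: int = 1) -> int:
    """Parameters in one recurrent layer built from `num_blocks` blocks.

    Each block has an input weight matrix (hidden x input), a recurrent
    weight matrix (hidden x hidden), and `biases_per_block` bias vectors.
    """
    per_block = (hidden_size * input_size
                 + hidden_size * hidden_size
                 + biases_per_block * hidden_size)
    return num_blocks * per_block


def gru_params(input_size: int, hidden_size: int, biases_per_block: int = 1) -> int:
    # Three blocks: update gate, reset gate, candidate state
    return gated_layer_params(3, input_size, hidden_size, biases_per_block)


def lstm_params(input_size: int, hidden_size: int, biases_per_block: int = 1) -> int:
    # Four blocks: input, forget, and output gates, plus the cell candidate
    return gated_layer_params(4, input_size, hidden_size, biases_per_block)


print(gru_params(128, 256))   # 295680
print(lstm_params(128, 256))  # 394240
print(gru_params(128, 256) / lstm_params(128, 256))  # 0.75
```

The ratio is exactly 3/4 for any sizes because both layers are built from identical blocks. Real speed differences also depend on the implementation and hardware, so the parameter count is a proxy, not a benchmark.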

Overall, Gated Recurrent Units play an important role in improving RNN performance on sequence modeling tasks. By mitigating the vanishing gradient problem and capturing long-range dependencies, GRUs have become an important tool in deep learning. They are likely to continue to play a significant role in the development of more advanced recurrent architectures.
