The Role of Long Short-Term Memory (LSTM) in Recurrent Neural Networks

Long Short-Term Memory (LSTM) is a recurrent neural network (RNN) architecture designed to overcome the difficulty traditional RNNs have in learning long-term dependencies in sequential data. In this article, we explore the role of LSTM in RNNs and why it became so influential in deep learning.

RNNs are neural networks designed to process sequential data, such as time series or natural language text. They carry a hidden state from one time step to the next, which acts as a memory of previous inputs and lets them make predictions based on past information. However, traditional RNNs have difficulty learning long-term dependencies, because the gradient signal is multiplied by the recurrent weights at every time step during backpropagation and can therefore vanish or explode over long sequences.
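To make the vanishing and exploding behaviour concrete, here is a minimal sketch in Python with NumPy. It is a simplification and not taken from the article: it assumes a purely linear recurrence (no activation function) and a recurrent weight matrix whose singular values all equal a hypothetical `recurrent_scale`, so the gradient norm changes by exactly that factor at every step.

```python
import numpy as np


def gradient_norm_through_time(recurrent_scale, steps=50, hidden_size=8, seed=0):
    """Norm of a gradient vector after backpropagating through `steps`
    linear recurrent steps (simplifying assumption: no nonlinearity)."""
    rng = np.random.default_rng(seed)
    # Build an orthogonal matrix and scale it, so every singular value
    # of the recurrent matrix equals `recurrent_scale`.
    q, _ = np.linalg.qr(rng.standard_normal((hidden_size, hidden_size)))
    w = recurrent_scale * q
    grad = np.ones(hidden_size)
    for _ in range(steps):
        # Each step back in time multiplies the gradient by W transposed.
        grad = w.T @ grad
    return float(np.linalg.norm(grad))


if __name__ == "__main__":
    for scale in (0.9, 1.0, 1.1):
        print(scale, gradient_norm_through_time(scale))
```

With a scale below 1 the norm shrinks geometrically with the number of steps, and with a scale above 1 it grows geometrically, which is the behaviour described above.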

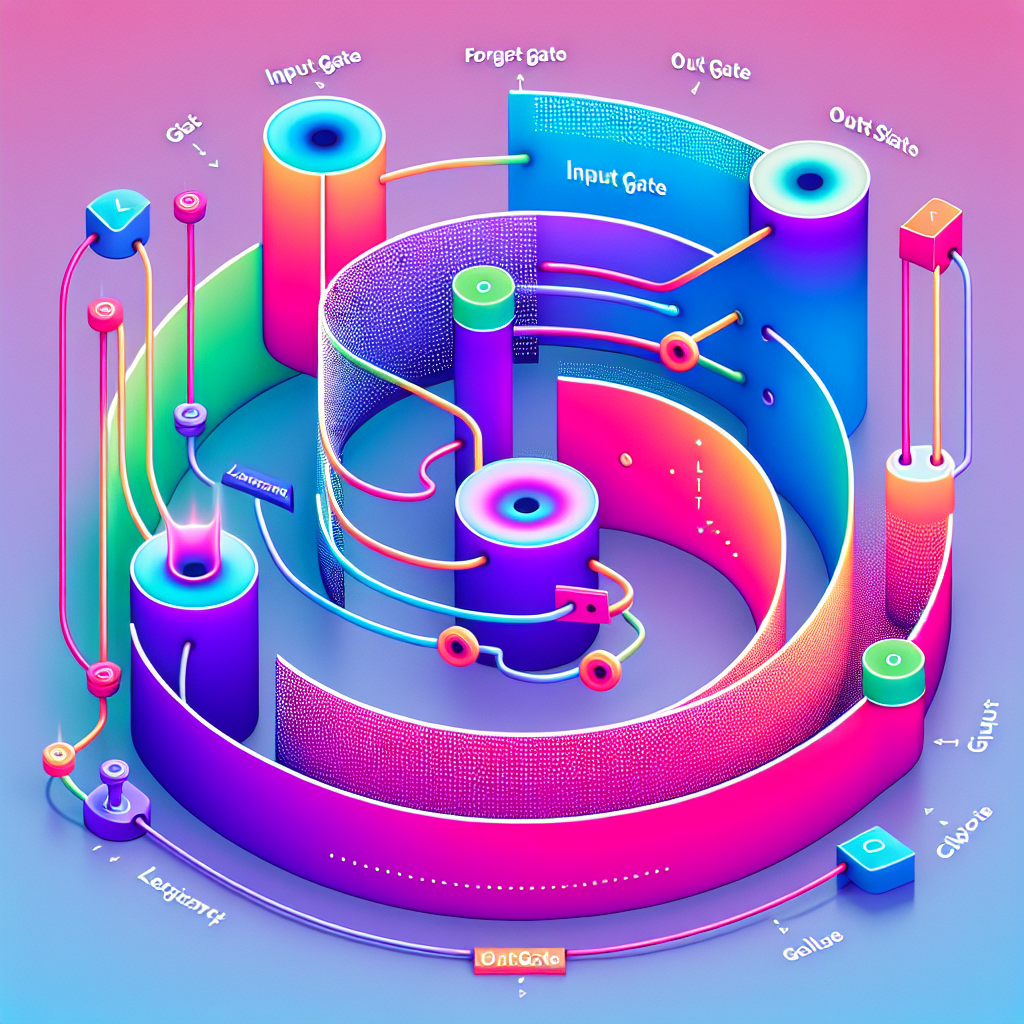

LSTM was proposed as a solution to this problem by Sepp Hochreiter and Jürgen Schmidhuber in 1997. The key innovation of LSTM is the introduction of a memory cell, which is responsible for storing and accessing information over long periods of time. In the now-standard formulation, the memory cell is controlled by three gates: the input gate, the forget gate, and the output gate (the forget gate was a later addition to the original 1997 design). These gates regulate the flow of information into and out of the cell, allowing the network to selectively remember or forget information.

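The input gate determines how much new information is written to the cell, the forget gate controls how much of the existing cell contents is discarded, and the output gate decides how much of the cell state is exposed as the hidden state at each step. Because the cell state is updated additively rather than being completely rewritten at every step, information and gradients can persist across many time steps. This mechanism allows LSTM to learn long-term dependencies by retaining important information and discarding irrelevant information.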
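The gate mechanics can be sketched as a single time step in Python with NumPy. This is an illustrative sketch of the commonly used LSTM formulation, not code from the article; the function name `lstm_step`, the stacked weight matrix `W`, and the gate ordering (input, forget, output, candidate) are assumptions made for this example.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM time step.

    x:      input vector, shape (input_size,)
    h_prev: previous hidden state, shape (hidden_size,)
    c_prev: previous cell state, shape (hidden_size,)
    W:      stacked weights, shape (4 * hidden_size, input_size + hidden_size)
    b:      stacked biases, shape (4 * hidden_size,)
    """
    n = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b
    i = sigmoid(z[0:n])          # input gate: how much new information to store
    f = sigmoid(z[n:2 * n])      # forget gate: how much old cell state to keep
    o = sigmoid(z[2 * n:3 * n])  # output gate: how much of the cell to expose
    g = np.tanh(z[3 * n:])       # candidate values to write into the cell
    c = f * c_prev + i * g       # additive cell-state update
    h = o * np.tanh(c)           # hidden state passed to the next step
    return h, c


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    input_size, hidden_size = 4, 3
    W = rng.standard_normal((4 * hidden_size, input_size + hidden_size)) * 0.1
    b = np.zeros(4 * hidden_size)
    h = np.zeros(hidden_size)
    c = np.zeros(hidden_size)
    # Run the cell over a short random sequence of 5 time steps.
    for x in rng.standard_normal((5, input_size)):
        h, c = lstm_step(x, h, c, W, b)
    print(h, c)
```

When the forget gate is close to 1 and the input gate is close to 0, the cell state is copied forward almost unchanged, which is how the cell can hold information across many steps.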

One of the main advantages of LSTM is its ability to capture long-term dependencies in sequential data. This makes it well-suited for tasks such as speech recognition, machine translation, and sentiment analysis, where the context of the input data is crucial for making accurate predictions.

Furthermore, LSTM has been shown to outperform traditional RNNs on many sequence tasks that depend on long-range context, demonstrating its effectiveness in learning complex patterns in sequential data. Its gating mechanism mitigates the vanishing gradient problem; exploding gradients can still occur and are usually handled separately, for example with gradient clipping. This made LSTM a popular choice for many deep learning applications and led to its widespread adoption in the research community.
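As an illustration of how exploding gradients are commonly kept in check during training, the sketch below rescales a list of gradient arrays so that their combined norm does not exceed a threshold. Gradient norm clipping is a general training technique, not part of the LSTM cell itself, and the helper name `clip_by_global_norm` and its parameters are hypothetical names chosen for this example.

```python
import numpy as np


def clip_by_global_norm(grads, max_norm):
    """Rescale all gradient arrays so their combined L2 norm is at most max_norm.

    Returns the (possibly rescaled) gradients and the norm before clipping.
    """
    total_norm = float(np.sqrt(sum(np.sum(g ** 2) for g in grads)))
    # Scale is 1.0 when the gradients are already small enough.
    scale = min(1.0, max_norm / (total_norm + 1e-12))
    return [g * scale for g in grads], total_norm


if __name__ == "__main__":
    grads = [np.array([3.0, 4.0]), np.array([12.0])]
    clipped, norm_before = clip_by_global_norm(grads, max_norm=1.0)
    print(norm_before, clipped)
```

Clipping changes only the magnitude of the update, not its direction, so it bounds the step size without otherwise altering what the network learns from a batch.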

In conclusion, LSTM plays a crucial role in recurrent neural networks by enabling them to learn long-term dependencies in sequential data. Its gated memory cell made it practical to train recurrent models on tasks that traditional RNNs handled poorly, such as speech recognition and machine translation. As the field continues to evolve, LSTM is likely to remain an important building block for sequence modeling.
