Understanding Long Short-Term Memory (LSTM) Networks: A Comprehensive Guide

In recent years, deep learning has driven breakthroughs in tasks such as image recognition, natural language processing, and speech recognition. For sequential data, one widely used architecture is the Long Short-Term Memory (LSTM) network, a type of recurrent neural network designed to capture long-term dependencies.

In this comprehensive guide, we will explore the inner workings of LSTM networks, their advantages over traditional recurrent neural networks, and how they can be applied to various real-world tasks.

What is an LSTM network?

An LSTM network is a type of recurrent neural network (RNN) designed to address the vanishing gradient problem. In an RNN, the error signal is backpropagated through every time step of the sequence, and at each step it is multiplied by a factor that is often smaller than one. Over many time steps the gradient shrinks exponentially, which makes it difficult for traditional RNNs to learn long-term dependencies in sequential data.
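To make the exponential shrinkage concrete, here is a minimal sketch using a one-unit RNN of the form h_t = tanh(w * h_{t-1}). The function name `gradient_through_time`, the weight `w = 0.9`, and the starting state are illustrative assumptions, not values from any particular model.

```python
import math

def gradient_through_time(w, steps, h0=0.5):
    """Return |d h_T / d h_0| for the scalar RNN h_t = tanh(w * h_{t-1})."""
    h = h0
    grad = 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        # Chain rule for one step: d h_t / d h_{t-1} = w * (1 - h_t^2)
        grad *= w * (1.0 - h * h)
    return abs(grad)

# The gradient magnitude drops quickly as the sequence gets longer.
for steps in (1, 10, 50):
    print(steps, gradient_through_time(w=0.9, steps=steps))
```

Each step multiplies the gradient by a factor below one in magnitude, so the signal from early time steps is nearly gone after a few dozen steps.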

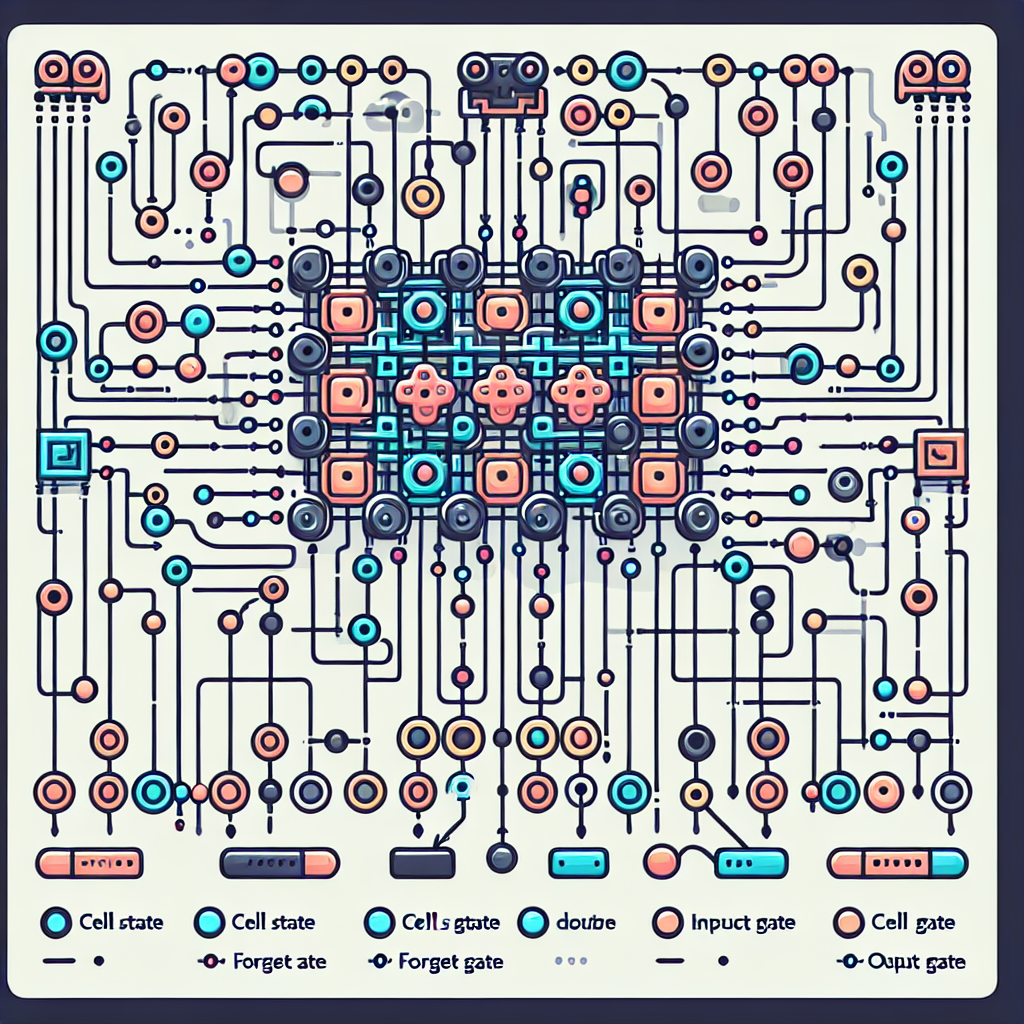

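LSTM networks mitigate this problem by introducing a memory cell that can store information over long periods of time. The cell state carries information forward from one time step to the next, and three gates regulate it: the forget gate decides what to discard from the cell state, the input gate decides what new information to write into it, and the output gate decides how much of it to expose as the hidden state. Together, these components allow the network to selectively update and access the memory cell at each time step.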
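The gate mechanics can be sketched as a single forward step of a standard LSTM cell. This is a minimal, dependency-free illustration using plain Python lists; the helper names (`affine`, `lstm_step`, `init_params`), the parameter layout, and the random initialization are assumptions made for this example, and a real project would normally use a deep learning library instead.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Compute W @ v + b for a matrix W stored as a list of rows."""
    return [sum(w_ij * v_j for w_ij, v_j in zip(row, v)) + b_i
            for row, b_i in zip(W, b)]

def lstm_step(x, h_prev, c_prev, params):
    """Run one LSTM time step and return the new (hidden state, cell state)."""
    v = h_prev + x  # concatenate previous hidden state and current input
    f = [sigmoid(z) for z in affine(params["Wf"], params["bf"], v)]    # forget gate
    i = [sigmoid(z) for z in affine(params["Wi"], params["bi"], v)]    # input gate
    o = [sigmoid(z) for z in affine(params["Wo"], params["bo"], v)]    # output gate
    g = [math.tanh(z) for z in affine(params["Wg"], params["bg"], v)]  # candidate values
    # Keep part of the old cell state and add part of the candidate values.
    c = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c_prev, i, g)]
    # The output gate controls how much of the cell state becomes visible.
    h = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c)]
    return h, c

def init_params(input_size, hidden_size, seed=0):
    """Hypothetical initializer: small random weights, zero biases."""
    rng = random.Random(seed)
    params = {}
    for gate in "fiog":
        params["W" + gate] = [
            [rng.uniform(-0.1, 0.1) for _ in range(hidden_size + input_size)]
            for _ in range(hidden_size)
        ]
        params["b" + gate] = [0.0] * hidden_size
    return params

# Feed a short sequence through the cell, carrying h and c between steps.
params = init_params(input_size=2, hidden_size=3)
h, c = [0.0] * 3, [0.0] * 3
for x in ([1.0, 0.0], [0.0, 1.0], [0.5, 0.5]):
    h, c = lstm_step(x, h, c, params)
print(h, c)
```

Note that the cell state update is additive (`f * c_prev + i * g`). When the forget gate stays close to one, the cell state passes through almost unchanged, which is what lets information and gradients survive across many time steps.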

Advantages of LSTM networks

One of the main advantages of LSTM networks is their ability to capture long-term dependencies in sequential data. This makes them well-suited for tasks such as language modeling, speech recognition, and time series prediction, where the input data is structured as a sequence of values.

Another advantage of LSTM networks is their ability to learn complex patterns in sequential data, often even when the data is noisy. Missing values are not handled automatically and typically require preprocessing such as imputation or masking. With enough training data and appropriate regularization, LSTM networks can generalize well to new, unseen examples.

Applications of LSTM networks

LSTM networks have been successfully applied to a wide range of tasks in natural language processing, speech recognition, and time series analysis. Some common applications of LSTM networks include:

– Language modeling and text classification: LSTM networks can be used to predict the next word in a sentence, generate text, or classify the sentiment of a piece of text.

– Speech recognition: LSTM networks can be used to transcribe spoken language into text, identify speakers, or detect speech disorders.

– Time series prediction: LSTM networks can be used to forecast future values in a time series, such as stock prices, weather data, or sensor readings.
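For time series prediction in particular, the raw series usually has to be cut into fixed-length input windows paired with the value to forecast before it can be fed to an LSTM. The sketch below shows one common way to do this; the function name `make_windows`, its parameters, and the sample readings are hypothetical and chosen only for illustration.

```python
def make_windows(series, window, horizon=1):
    """Split a series into (inputs, target) pairs for supervised forecasting.

    Each pair holds `window` consecutive values and the value that lies
    `horizon` steps after the end of that window.
    """
    pairs = []
    for start in range(len(series) - window - horizon + 1):
        inputs = series[start:start + window]
        target = series[start + window + horizon - 1]
        pairs.append((inputs, target))
    return pairs

readings = [10, 11, 13, 12, 15, 16]  # made-up sensor readings
for inputs, target in make_windows(readings, window=3):
    print(inputs, "->", target)
```

Each window would then be passed through the network one value per time step, with the final hidden state used to predict the target.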

Conclusion

In conclusion, LSTM networks are a powerful tool for capturing long-term dependencies in sequential data. Their gated memory cell lets them learn complex patterns across many time steps, which makes them well-suited to language modeling, speech recognition, and time series prediction. Understanding how the gates and the cell state work together helps researchers and practitioners apply LSTM networks effectively to real-world problems.
