Recurrent Neural Networks (RNNs) are widely used for sequential data such as text, speech, and time series, because they process inputs one step at a time while carrying information forward from earlier steps. This article gives an overview of RNNs: their architecture, how they are trained, and where they are applied.

Architecture of RNNs

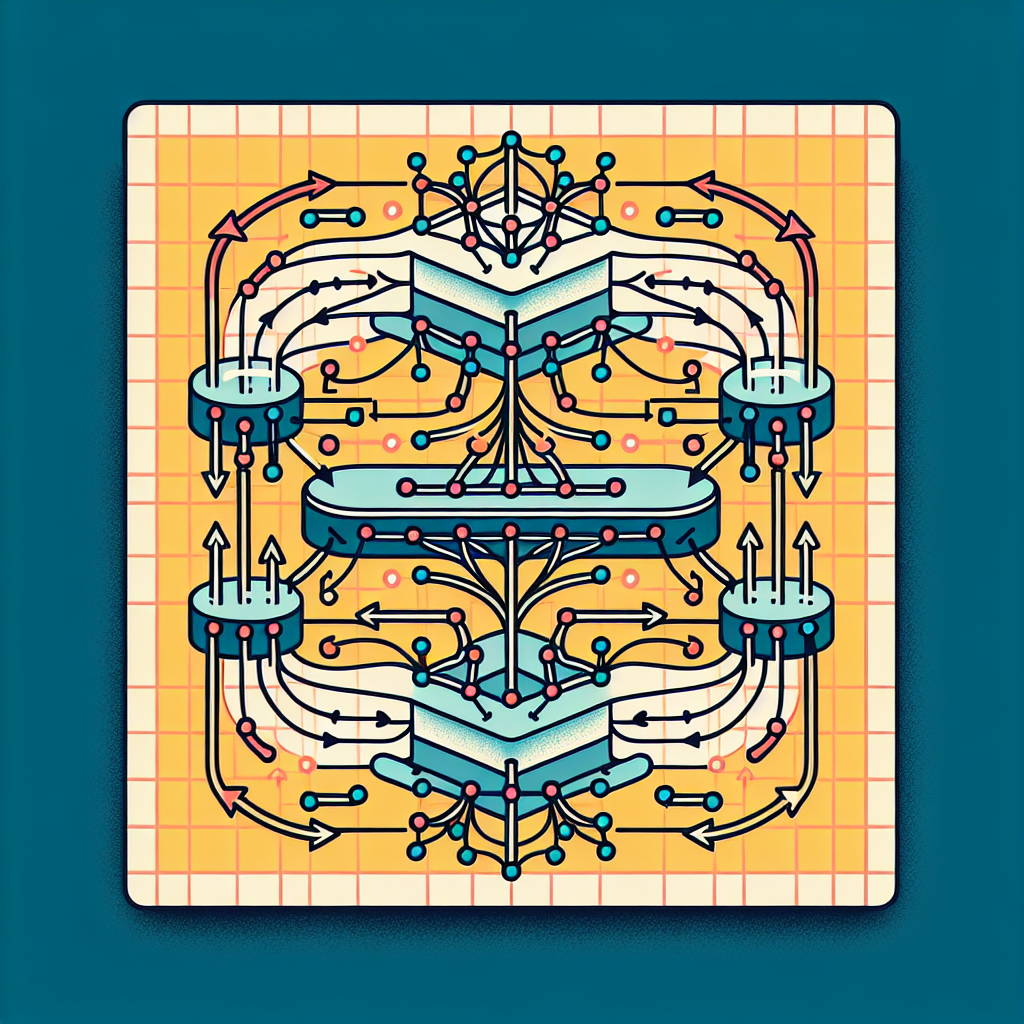

RNNs are neural networks whose connections form a directed cycle: the hidden state computed at one time step is fed back into the network at the next. This lets information persist across time steps. The basic building block of an RNN is the recurrent unit, which maintains an internal (hidden) state that is updated at each time step from the current input and the previous state, using the same weights at every step.
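
As a minimal sketch of this recurrence, assuming the simple (Elman-style) update `h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)`, the Python below runs one recurrent layer over a short sequence. The names `rnn_forward`, `W_xh`, `W_hh`, and `b_h` are illustrative, the sizes are arbitrary, and the weights are random rather than trained; plain lists are used so the example needs only the standard library.

```python
import math
import random


def matvec(W, v):
    """Multiply a matrix W (a list of rows) by a vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rnn_forward(xs, W_xh, W_hh, b_h, h0):
    """Run a simple (Elman-style) RNN over a sequence of input vectors.

    Each step computes h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h),
    reusing the same weights at every step. Returns [h_1, ..., h_T].
    """
    h = h0
    states = []
    for x in xs:
        pre = [a + b + c for a, b, c in zip(matvec(W_xh, x), matvec(W_hh, h), b_h)]
        h = [math.tanh(p) for p in pre]
        states.append(h)
    return states


# Hypothetical sizes and random (untrained) weights, for illustration only.
rng = random.Random(0)
input_size, hidden_size = 3, 4
W_xh = [[rng.gauss(0, 0.5) for _ in range(input_size)] for _ in range(hidden_size)]
W_hh = [[rng.gauss(0, 0.5) for _ in range(hidden_size)] for _ in range(hidden_size)]
b_h = [0.0] * hidden_size
h0 = [0.0] * hidden_size

sequence = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
states = rnn_forward(sequence, W_xh, W_hh, b_h, h0)
for t, h in enumerate(states, start=1):
    print(t, [round(v, 3) for v in h])
```

Because `W_hh` is reused at every step, changing the first input also changes every later hidden state; that is the sense in which information persists over time.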

One of the most widely used RNN architectures is the Long Short-Term Memory (LSTM) network, which adds gates that control the flow of information and mitigate the vanishing gradient problem. An LSTM has three gates: the input gate, the forget gate, and the output gate, which regulate what is written to, kept in, and read from the cell state.
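
The gate mechanism can be sketched as a single LSTM time step. This follows the common textbook formulation (forget gate `f`, input gate `i`, candidate values `g`, output gate `o`, with `c_t = f * c_{t-1} + i * g` and `h_t = o * tanh(c_t)`, all elementwise); variants such as peephole connections exist and are not shown. The function and parameter names are hypothetical, and the weights are random.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, b, v):
    """Compute W v + b for a matrix W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step using the standard gate equations."""
    z = h_prev + x  # list concatenation: [h_{t-1}, x_t]
    f = [sigmoid(v) for v in affine(params["W_f"], params["b_f"], z)]  # forget gate
    i = [sigmoid(v) for v in affine(params["W_i"], params["b_i"], z)]  # input gate
    g = [math.tanh(v) for v in affine(params["W_g"], params["b_g"], z)]  # candidate values
    o = [sigmoid(v) for v in affine(params["W_o"], params["b_o"], z)]  # output gate
    # New cell state: keep part of the old state and add part of the candidate.
    c = [ft * ct + it * gt for ft, ct, it, gt in zip(f, c_prev, i, g)]
    # New hidden state: a gated, squashed view of the cell state.
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]
    return h, c


def init_params(input_size, hidden_size, seed=0):
    """Random weights and zero biases for the four gate layers (illustrative only)."""
    rng = random.Random(seed)
    n = hidden_size + input_size
    params = {}
    for gate in "figo":
        params[f"W_{gate}"] = [[rng.gauss(0, 0.3) for _ in range(n)] for _ in range(hidden_size)]
        params[f"b_{gate}"] = [0.0] * hidden_size
    return params


params = init_params(input_size=2, hidden_size=3)
h, c = [0.0] * 3, [0.0] * 3
for x in [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]:
    h, c = lstm_step(x, h, c, params)
print([round(v, 3) for v in h], [round(v, 3) for v in c])
```

The additive cell update is the key design choice: when the forget gate stays close to 1 and the input gate close to 0, the cell state passes through a step almost unchanged, which is how the LSTM keeps gradients from shrinking at every step.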

Training Process of RNNs

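Training an RNN involves feeding sequential data into the network and adjusting the weights to reduce the error between the predicted output and the ground truth. The standard way to compute the required gradients is backpropagation through time (BPTT): the network is unrolled across the time steps of a sequence, and the gradient of the loss with respect to each weight is obtained by summing that weight's contributions from every step. An optimizer such as stochastic gradient descent then uses these gradients to update the weights.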
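
To make BPTT concrete, here is a sketch for a scalar RNN `h_t = tanh(w * h_{t-1} + u * x_t)` with a squared-error loss on the final state only (an assumption chosen for brevity). The backward loop walks the unrolled steps in reverse and accumulates the gradients of the shared weights `w` and `u` at every step; the result is checked against finite differences and then used for plain gradient descent. All names and values are illustrative.

```python
import math


def forward(w, u, xs):
    """Scalar RNN h_t = tanh(w * h_{t-1} + u * x_t), starting from h_0 = 0.

    Returns [h_0, h_1, ..., h_T] so the backward pass can reuse them.
    """
    hs = [0.0]
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + u * x))
    return hs


def loss_and_grads(w, u, xs, y):
    """Loss 0.5 * (h_T - y)^2 and its gradients with respect to w and u, via BPTT."""
    hs = forward(w, u, xs)
    loss = 0.5 * (hs[-1] - y) ** 2
    dw = du = 0.0
    dh = hs[-1] - y  # dL/dh_T
    # Walk the unrolled steps backwards; w and u are shared, so every step adds to their gradients.
    for t in range(len(xs), 0, -1):
        da = dh * (1.0 - hs[t] ** 2)  # back through tanh
        dw += da * hs[t - 1]
        du += da * xs[t - 1]
        dh = da * w  # gradient flowing into h_{t-1}
    return loss, dw, du


xs, y = [0.5, -1.0, 0.8, 0.3], 0.6  # illustrative data
w, u = 0.7, 0.4

loss0, bptt_dw, bptt_du = loss_and_grads(w, u, xs, y)

# Sanity check: compare BPTT with central finite differences.
eps = 1e-6
num_dw = (loss_and_grads(w + eps, u, xs, y)[0] - loss_and_grads(w - eps, u, xs, y)[0]) / (2 * eps)
num_du = (loss_and_grads(w, u + eps, xs, y)[0] - loss_and_grads(w, u - eps, xs, y)[0]) / (2 * eps)
print(bptt_dw, num_dw)
print(bptt_du, num_du)

# Plain gradient descent using the BPTT gradients.
lr = 0.2
for _ in range(100):
    _, dw, du = loss_and_grads(w, u, xs, y)
    w -= lr * dw
    u -= lr * du
final_loss = loss_and_grads(w, u, xs, y)[0]
print(loss0, final_loss)
```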

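One of the challenges of training RNNs is the vanishing gradient problem: as gradients are propagated back through many time steps, they are repeatedly multiplied by small factors and shrink toward zero, so the network learns long-range dependencies slowly or not at all. The opposite problem, exploding gradients, occurs when those factors are large and gradients grow without bound; it is commonly handled with gradient clipping, which rescales gradients whose norm exceeds a threshold. Vanishing gradients are usually addressed with gated architectures such as LSTM or Gated Recurrent Unit (GRU) networks.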
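
Both gradient problems can be illustrated with a scalar RNN `h_t = tanh(w * h_{t-1} + u * x_t)`. The first function below multiplies the per-step derivatives `w * (1 - h_t^2)` that make up `dh_T/dh_0`; with `|w| < 1` this product shrinks geometrically as the sequence gets longer. The second sketches clipping by global norm, one common form of gradient clipping. Frameworks ship their own versions (for example PyTorch's `torch.nn.utils.clip_grad_norm_`), so these hand-rolled functions, whose names are hypothetical, are for illustration only.

```python
import math


def gradient_through_time(w, u, xs):
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + u * x_t).

    By the chain rule this is the product of the per-step factors w * (1 - h_t^2).
    """
    h, factor = 0.0, 1.0
    for x in xs:
        h = math.tanh(w * h + u * x)
        factor *= w * (1.0 - h * h)
    return factor


def clip_by_global_norm(grads, max_norm):
    """Rescale gradients so their combined L2 norm is at most max_norm (direction unchanged)."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]


# Vanishing: with |w| < 1 every factor is below 1, so the product decays with sequence length.
for steps in (5, 20, 50):
    print(steps, gradient_through_time(0.9, 0.4, [0.5] * steps))

# Exploding gradients are handled by clipping: a gradient of norm 50 is rescaled to norm 5.
print(clip_by_global_norm([30.0, -40.0], max_norm=5.0))
```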

Applications of RNNs

RNNs have been successfully applied to a wide range of tasks, including natural language processing, speech recognition, and time series forecasting. In natural language processing, RNNs can be used for tasks such as language modeling, machine translation, and sentiment analysis. In speech recognition, RNNs can be used to transcribe audio recordings into text.
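
For language modeling, an RNN reads one token at a time and, at each step, outputs a probability distribution over the next token. The sketch below assumes a character-level model with one-hot inputs, a tanh recurrence, and a softmax output layer, and reports the average negative log-likelihood of a string. The function names and sizes are hypothetical, and because the weights are random rather than trained, the printed score only serves as a baseline; training with BPTT is what would lower it. For sequence classification tasks such as sentiment analysis, a typical alternative is to feed only the final hidden state to a classifier.

```python
import math
import random


def softmax(zs):
    """Numerically stable softmax."""
    m = max(zs)
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]


def one_hot(index, size):
    v = [0.0] * size
    v[index] = 1.0
    return v


def matvec(W, v):
    return [sum(a * b for a, b in zip(row, v)) for row in W]


def sequence_nll(text, vocab, W_xh, W_hh, W_hy):
    """Average negative log-likelihood of each next character given the prefix so far."""
    idx = {ch: i for i, ch in enumerate(vocab)}
    h = [0.0] * len(W_hh)
    total, step_probs = 0.0, []
    for current, nxt in zip(text, text[1:]):
        x = one_hot(idx[current], len(vocab))
        h = [math.tanh(a + b) for a, b in zip(matvec(W_xh, x), matvec(W_hh, h))]
        probs = softmax(matvec(W_hy, h))  # distribution over the next character
        step_probs.append(probs)
        total -= math.log(probs[idx[nxt]])
    return total / (len(text) - 1), step_probs


rng = random.Random(0)
vocab = sorted(set("hello world"))
V, H = len(vocab), 8  # vocabulary size, hidden size (illustrative)
W_xh = [[rng.gauss(0, 0.3) for _ in range(V)] for _ in range(H)]
W_hh = [[rng.gauss(0, 0.3) for _ in range(H)] for _ in range(H)]
W_hy = [[rng.gauss(0, 0.3) for _ in range(H)] for _ in range(V)]

nll, step_probs = sequence_nll("hello world", vocab, W_xh, W_hh, W_hy)
print(round(nll, 3), "nats/char; uniform baseline:", round(math.log(V), 3))
```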

In time series forecasting, RNNs are trained to predict future values from past observations. Typical examples include financial series such as stock prices and weather variables such as temperature and precipitation. RNNs can also be used for anomaly detection in time series data: a common approach is to flag points where the observed value differs sharply from the model's forecast.
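
Forecasting and anomaly detection fit together roughly as follows. The series is cut into (past window, next value) pairs, which is the usual training format for one-step-ahead forecasting, and points whose forecast error lies more than `k` standard deviations from the mean error are flagged. To keep the example self-contained, `median_forecast` is a hypothetical placeholder that returns the median of the window; in practice a trained RNN would produce the forecast. The window length, `k = 3`, and the synthetic series are assumptions.

```python
import statistics


def make_windows(series, window):
    """Turn a series into (past window, next value) pairs for one-step-ahead forecasting."""
    return [(series[i:i + window], series[i + window]) for i in range(len(series) - window)]


def median_forecast(past):
    """Hypothetical placeholder predictor: the median of the window.

    A trained RNN would take its place, reading `past` step by step and outputting a forecast.
    """
    return statistics.median(past)


def detect_anomalies(series, window, predict, k=3.0):
    """Return indices whose forecast error lies more than k standard deviations from the mean error."""
    residuals = [target - predict(past) for past, target in make_windows(series, window)]
    mu = statistics.mean(residuals)
    sigma = statistics.pstdev(residuals)
    # Residual j belongs to the value at index j + window.
    return [j + window for j, r in enumerate(residuals) if abs(r - mu) > k * sigma]


series = [0.5 * i for i in range(40)]  # steady upward trend (synthetic)
series[25] += 10.0                     # inject a single spike
print(detect_anomalies(series, window=5, predict=median_forecast))  # → [25]
```

A last-value predictor would also flag index 26 here, because the spike becomes the input for the next forecast; the median placeholder is not thrown off by a single outlier in its window.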

In conclusion, RNNs are a natural fit for sequential data because their hidden state carries information from one time step to the next. This makes them well suited to applications in natural language processing, speech recognition, and time series forecasting. Understanding their architecture, how they are trained with BPTT, and pitfalls such as the vanishing gradient problem helps researchers and practitioners apply them effectively.