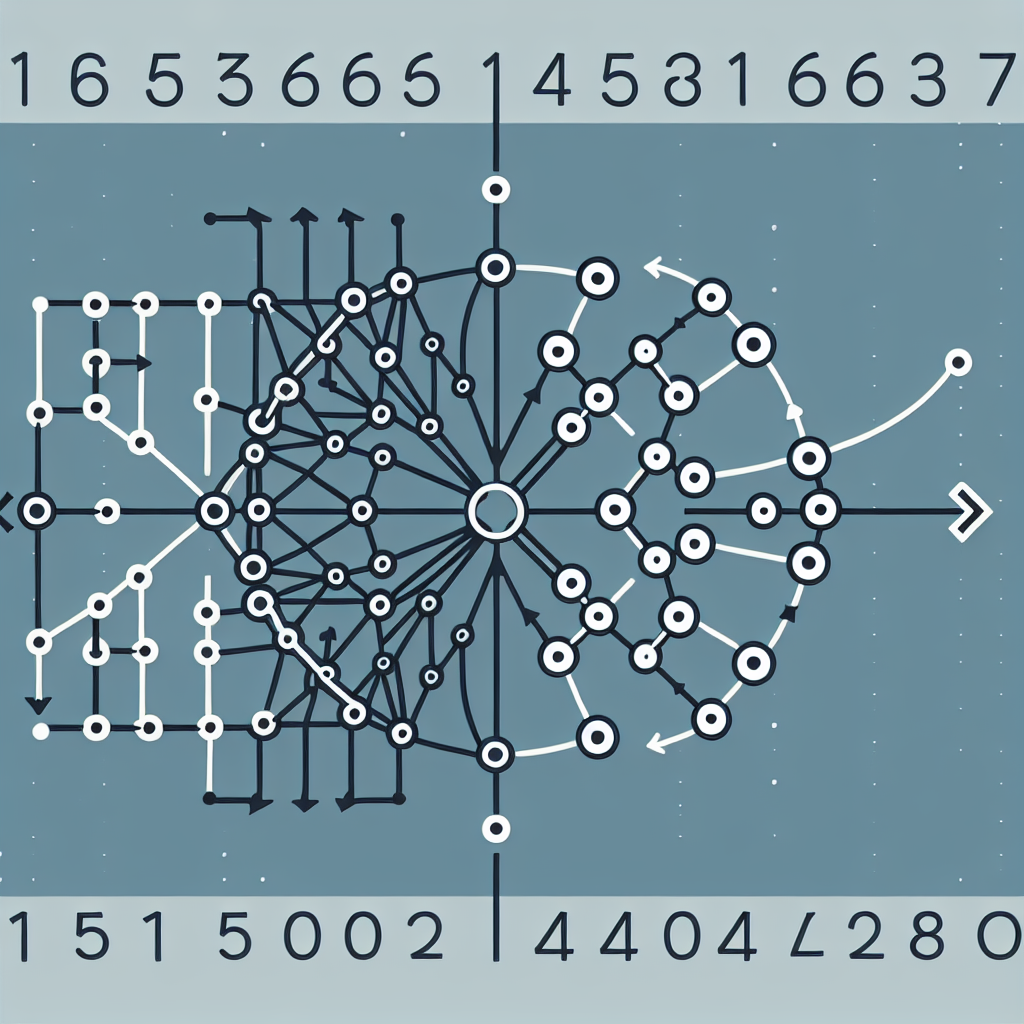

In recent years, recurrent neural networks (RNNs) have gained significant attention in the field of machine learning and artificial intelligence. These powerful models are designed to handle sequential data, making them particularly effective for tasks such as natural language processing, time series analysis, and speech recognition.

One of the key advantages of RNNs is their ability to capture dependencies and patterns in sequential data. Unlike traditional feedforward neural networks, which process each input independently, RNNs contain a feedback loop: at each time step they update a hidden state from the current input and the previous hidden state. This hidden state acts as a memory of earlier inputs, so the network's output at any step can depend on everything it has seen so far. This makes RNNs well suited to tasks in which the order of the inputs matters.
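To make the feedback loop concrete, here is a minimal sketch of a plain (Elman-style) RNN forward pass in pure Python. It assumes the common update rule h_t = tanh(W_xh·x_t + W_hh·h_{t-1} + b); the helper names (`matvec`, `rnn_step`, `rnn_forward`) and the toy weights are hypothetical, chosen only for illustration. Feeding one non-zero input followed by zeros shows that the first input keeps shaping later hidden states.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m (rows x cols) by vector v (length cols)."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rnn_step(x: Vector, h_prev: Vector, w_xh: Matrix, w_hh: Matrix, b: Vector) -> Vector:
    """One Elman RNN update: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b)."""
    a = matvec(w_xh, x)       # contribution of the current input
    r = matvec(w_hh, h_prev)  # contribution of the previous hidden state (the feedback loop)
    return [math.tanh(ai + ri + bi) for ai, ri, bi in zip(a, r, b)]


def rnn_forward(xs: List[Vector], w_xh: Matrix, w_hh: Matrix, b: Vector) -> List[Vector]:
    """Run the cell over a sequence, returning the hidden state after each step."""
    h = [0.0] * len(b)  # initial hidden state h_0 = 0
    states = []
    for x in xs:
        h = rnn_step(x, h, w_xh, w_hh, b)
        states.append(h)
    return states


# Hypothetical toy weights: 1-dimensional input, 2-dimensional hidden state.
W_XH = [[0.5], [-0.3]]
W_HH = [[0.8, 0.1], [0.0, 0.9]]
B = [0.0, 0.0]

states = rnn_forward([[1.0], [0.0], [0.0]], W_XH, W_HH, B)
for t, h in enumerate(states, start=1):
    print(t, [round(v, 3) for v in h])
```

Reversing the input order (`[[0.0], [0.0], [1.0]]`) produces a different final hidden state, which is the sense in which an RNN is sensitive to the order of its inputs.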

Another important feature of RNNs is their ability to handle variable-length sequences. Because the same weights are applied at every time step, the number of parameters does not depend on the length of the input, so a single model can process a short phrase or a long document. This flexibility makes RNNs suitable for a wide range of applications: they can be used to generate text, forecast time series such as stock prices, or analyze the sentiment of social media posts.
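A small sketch, assuming a one-dimensional hidden state and hypothetical weights `W_X`, `W_H`, and `BIAS`, shows why length does not matter: the hypothetical `encode` function reuses the same three parameters at every step and folds a sequence of any length into a single fixed-size hidden state.

```python
import math
from typing import Sequence

# Hypothetical scalar weights, shared across every time step.
W_X = 0.7   # input-to-hidden weight
W_H = 0.5   # hidden-to-hidden (recurrent) weight
BIAS = 0.0


def encode(sequence: Sequence[float]) -> float:
    """Fold a sequence of any length into one hidden state using the same weights at every step."""
    h = 0.0  # initial hidden state
    for x in sequence:
        h = math.tanh(W_X * x + W_H * h + BIAS)
    return h


short = encode([0.2, -0.1])
long_seq = encode([0.2, -0.1, 0.4, 0.9, -0.3])
print(short, long_seq)  # both are single numbers, whatever the input length
```

This sketch processes one sequence at a time; deep learning frameworks usually batch sequences of different lengths by padding them to a common length and masking the padded steps.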

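Despite their potential, RNNs can be challenging to train and optimize. One common issue is the vanishing gradient problem. When gradients are backpropagated through time, they are multiplied by one factor per time step, each involving the recurrent weights and the derivative of the activation function; when these factors are smaller than one, the product shrinks exponentially with the number of steps. The network then learns slowly and struggles to capture dependencies between inputs that are far apart in the sequence. To address this issue, researchers have developed variants of RNNs, such as long short-term memory (LSTM) networks and gated recurrent units (GRUs), which use learned gates to control what information is kept or discarded at each step. These architectures are specifically designed to preserve information across long sequences and mitigate the vanishing gradient problem.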
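The shrinking product can be checked numerically. For a one-dimensional RNN h_t = tanh(w·h_{t-1} + u·x_t), the chain rule gives dh_T/dh_0 as the product over t of w·(1 - h_t^2), since 1 - tanh^2 is the derivative of tanh. The sketch below assumes hypothetical values w = 0.9, u = 0.5 and a constant input of 1.0, and prints how this gradient decays as the sequence grows; the function names are illustrative only.

```python
import math
from typing import List


def hidden_states(xs: List[float], w: float, u: float) -> List[float]:
    """Scalar RNN h_t = tanh(w * h_{t-1} + u * x_t), starting from h_0 = 0."""
    h, states = 0.0, []
    for x in xs:
        h = math.tanh(w * h + u * x)
        states.append(h)
    return states


def grad_last_wrt_first(xs: List[float], w: float, u: float) -> float:
    """dh_T/dh_0 via the chain rule: product over t of w * (1 - h_t^2)."""
    grad = 1.0
    for h in hidden_states(xs, w, u):
        grad *= w * (1.0 - h * h)  # local derivative dh_t/dh_{t-1} = tanh'(.) * w
    return grad


# Hypothetical settings: recurrent weight 0.9, input weight 0.5, constant input 1.0.
for steps in (1, 10, 50, 100):
    g = grad_last_wrt_first([1.0] * steps, w=0.9, u=0.5)
    print(f"T={steps:3d}  dh_T/dh_0 = {g:.3e}")
```

Each factor here is below one, so the gradient from the last step back to the first collapses toward zero within a few dozen steps. In an LSTM, by contrast, the cell state is updated additively (c_t = f_t ⊙ c_{t-1} + i_t ⊙ g_t), so the direct per-step factor along that path is the forget gate f_t, which the network can learn to keep close to one.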

Overall, RNNs have the potential to transform the way we analyze sequential data. By capturing dependencies across time steps and handling variable-length inputs, they can extract useful structure from text, speech, and time series. As researchers continue to explore new architectures and training techniques for RNNs, we can expect further progress in sequential data analysis in the years to come.
