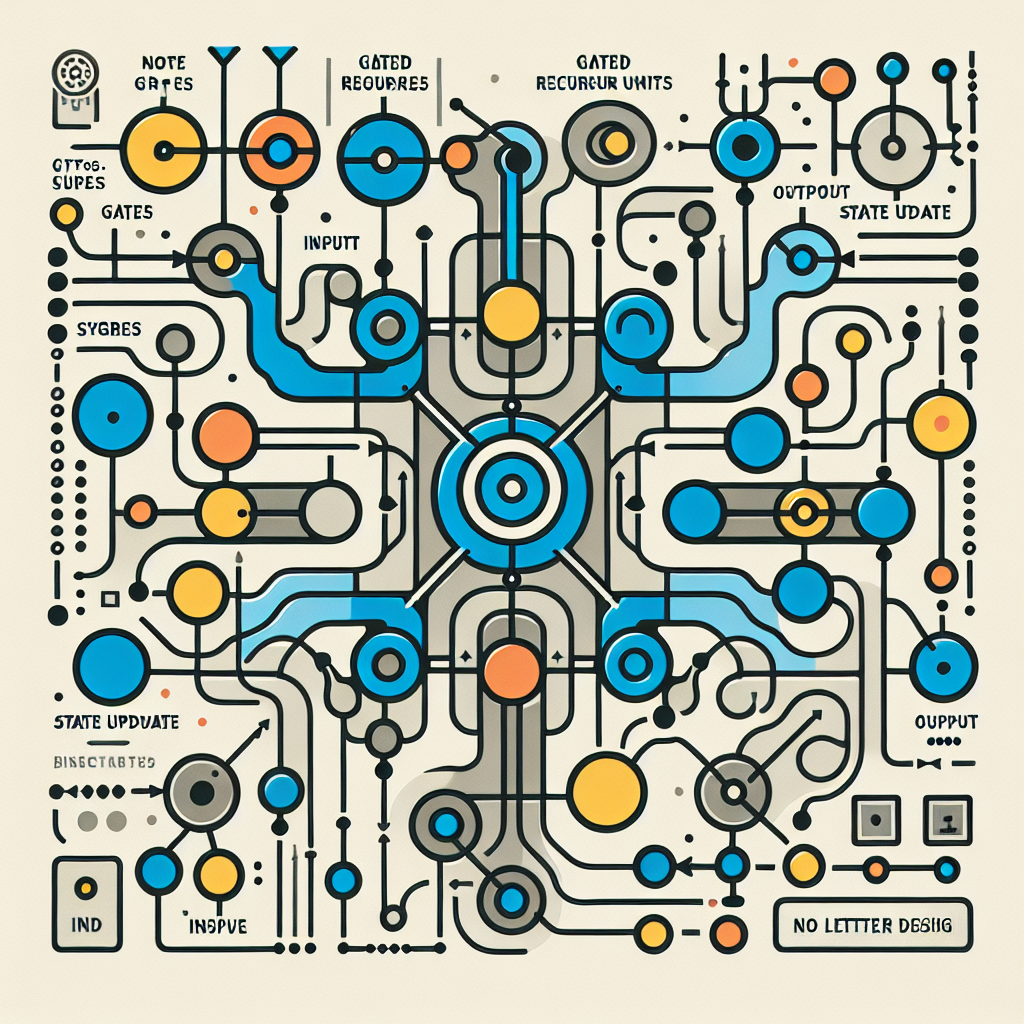

Gated Recurrent Units (GRUs) are a recurrent neural network architecture, introduced in 2014, that has become popular for modeling sequential data such as text, audio, and time series. In this article, we look at the inner workings of GRUs: what their gates compute and why that helps.

GRUs are a type of recurrent neural network (RNN) designed to mitigate the vanishing gradient problem of traditional RNNs. When gradients are backpropagated through many time steps, they are multiplied by one factor per step. If those factors are typically smaller than one, the gradient shrinks exponentially, which makes it difficult for the network to learn long-term dependencies in the data. GRUs address this issue by using gating mechanisms that control how information flows from one time step to the next.
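To see why the gradient vanishes, consider a toy scalar RNN $h_t = \tanh(w h_{t-1} + u x_t)$ (a hypothetical example, not taken from any particular model). By the chain rule, $\partial h_T / \partial h_0$ is the product of the per-step factors $w\,(1 - h_t^2)$, so when $|w| < 1$ every factor is below one in magnitude and the product decays at least as fast as $|w|^T$. The sketch below tracks that product in plain Python:

```python
import math

def vanilla_rnn_grad(w, u, xs, h0=0.0):
    """Return (h_T, dh_T/dh_0) for the toy scalar RNN h_t = tanh(w*h_{t-1} + u*x_t)."""
    h = h0
    grad = 1.0  # running product of the per-step derivatives dh_t/dh_{t-1}
    for x in xs:
        h = math.tanh(w * h + u * x)
        # d tanh(a)/da = 1 - tanh(a)^2 = 1 - h_t^2, and da/dh_{t-1} = w
        grad *= w * (1.0 - h * h)
    return h, grad

for steps in (1, 10, 50):
    _, g = vanilla_rnn_grad(w=0.9, u=1.0, xs=[0.5] * steps)
    print(f"{steps:>3} steps: dh_T/dh_0 = {g:.3e}")
```

The printed derivative collapses toward zero as the sequence gets longer, so an input 50 steps back has almost no influence on the gradient that reaches it.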

The key components of a GRU are the reset gate and the update gate. The reset gate controls how much of the previous hidden state is used when computing a new candidate state, while the update gate decides, for each unit, how much of the previous hidden state to keep and how much to replace with that candidate. Together, these gates let the GRU update its hidden state selectively based on the current input, which helps it learn long-term dependencies more effectively.
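In equation form, one common way to write a single GRU step is shown below, where $x_t$ is the input, $h_{t-1}$ the previous hidden state, $\sigma$ the logistic sigmoid, $\odot$ element-wise multiplication, and $W_\ast$, $U_\ast$, $b_\ast$ learned weights and biases (the symbol names are our choice). Conventions vary: some texts swap the roles of $z_t$ and $1 - z_t$, and some implementations, such as PyTorch's `nn.GRU`, apply the reset gate after the recurrent matrix multiplication rather than before it.

$$
\begin{aligned}
r_t &= \sigma(W_r x_t + U_r h_{t-1} + b_r) \\
z_t &= \sigma(W_z x_t + U_z h_{t-1} + b_z) \\
\tilde{h}_t &= \tanh\big(W_h x_t + U_h (r_t \odot h_{t-1}) + b_h\big) \\
h_t &= z_t \odot h_{t-1} + (1 - z_t) \odot \tilde{h}_t
\end{aligned}
$$

When $z_t$ is close to 1, $h_t \approx h_{t-1}$ and the state is carried forward almost unchanged; when $r_t$ is close to 0, the candidate $\tilde{h}_t$ largely ignores the previous state and depends mainly on the current input.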

One advantage of GRUs over traditional RNNs is that they can capture longer-term dependencies in the data, because their gating mitigates (though does not eliminate) the vanishing gradient problem. This makes them well suited to tasks such as language modeling, speech recognition, and machine translation, where understanding sequential patterns is crucial.
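The gating idea can be turned into a small forward pass. The following pure-Python sketch implements one GRU step in the convention $h_t = z_t \odot h_{t-1} + (1 - z_t) \odot \tilde{h}_t$, with the reset gate applied before the recurrent multiplication. It is written without dependencies for readability; a real model would use a tensor library. The helper names, the random initialization, and the `z_bias` parameter are hypothetical choices for illustration. The demo feeds zero inputs for 100 steps and measures how far the hidden state drifts from its starting value, once with a zero update-gate bias and once with a large positive bias that pushes $z_t$ toward 1.

```python
import math
import random

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]

def affine(W, x, U, h, b):
    """Compute W @ x + U @ h + b element-wise."""
    return [a + c + d for a, c, d in zip(matvec(W, x), matvec(U, h), b)]

def gru_step(x, h_prev, p):
    """One GRU step using the convention h_t = z*h_prev + (1 - z)*h_tilde."""
    r = [sigmoid(a) for a in affine(p["W_r"], x, p["U_r"], h_prev, p["b_r"])]
    z = [sigmoid(a) for a in affine(p["W_z"], x, p["U_z"], h_prev, p["b_z"])]
    # The reset gate scales the previous state before it enters the candidate.
    reset_h = [ri * hi for ri, hi in zip(r, h_prev)]
    h_tilde = [math.tanh(a) for a in affine(p["W_h"], x, p["U_h"], reset_h, p["b_h"])]
    # The update gate interpolates between keeping h_prev and adopting h_tilde.
    return [zi * hi + (1.0 - zi) * ti for zi, hi, ti in zip(z, h_prev, h_tilde)]

def init_params(n_in, n_hid, z_bias=0.0, seed=0):
    """Small random weights (hypothetical init); z_bias sets the update-gate bias."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    p = {}
    for g in ("r", "z", "h"):
        p["W_" + g] = mat(n_hid, n_in)
        p["U_" + g] = mat(n_hid, n_hid)
        p["b_" + g] = [0.0] * n_hid
    p["b_z"] = [z_bias] * n_hid  # same weights for every z_bias, only this bias differs
    return p

def run(h0, steps, z_bias):
    """Feed zero inputs for `steps` steps and return the final hidden state."""
    p = init_params(n_in=2, n_hid=len(h0), z_bias=z_bias)
    h = list(h0)
    for _ in range(steps):
        h = gru_step([0.0, 0.0], h, p)
    return h

h0 = [1.0, -1.0, 0.5]
for bias in (0.0, 8.0):
    hT = run(h0, steps=100, z_bias=bias)
    drift = max(abs(a - b) for a, b in zip(hT, h0))
    print(f"update-gate bias {bias:>4}: max |h_100 - h_0| = {drift:.4f}")
```

With the positive bias, the update gate stays close to 1, so each step changes the state by only a small fraction and the initial information survives the whole sequence; with zero bias, the state is expected to be largely overwritten. The same near-identity path (the Jacobian $\partial h_t / \partial h_{t-1}$ contains the term $\operatorname{diag}(z_t)$) is what lets gradients flow back across many steps.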

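In addition to their effectiveness in handling sequential data, GRUs are cheaper than Long Short-Term Memory (LSTM) networks, the other widely used gated RNN. A GRU has two gates and no separate memory cell, whereas an LSTM has three gates plus a cell state, so a GRU layer has roughly three-quarters as many parameters as an LSTM layer of the same size. This makes GRUs a popular choice for researchers and practitioners working with sequential data.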
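A quick way to check the parameter comparison is to count weights per layer. This sketch assumes the standard formulation in which every gate or candidate block has an input matrix, a recurrent matrix, and a single bias vector; the layer sizes are arbitrary. Some libraries (PyTorch, for example) keep two bias vectors per block, which raises both counts slightly but leaves the 3:4 ratio unchanged. A vanilla RNN would correspond to a single block, so a GRU is still more expensive than the simplest RNN.

```python
def rnn_layer_params(n_in, n_hid, n_blocks):
    """Parameters for one layer with `n_blocks` gate/candidate blocks, each having
    an input matrix (n_hid x n_in), a recurrent matrix (n_hid x n_hid) and one bias."""
    return n_blocks * (n_hid * n_in + n_hid * n_hid + n_hid)

def gru_params(n_in, n_hid):
    # reset gate, update gate, candidate state
    return rnn_layer_params(n_in, n_hid, 3)

def lstm_params(n_in, n_hid):
    # input, forget and output gates plus the cell candidate
    return rnn_layer_params(n_in, n_hid, 4)

n_in, n_hid = 128, 256
g, l = gru_params(n_in, n_hid), lstm_params(n_in, n_hid)
print(f"GRU: {g:,}  LSTM: {l:,}  ratio: {g / l:.2f}")
# prints "GRU: 295,680  LSTM: 394,240  ratio: 0.75"
```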

Overall, GRUs are a powerful tool for modeling sequential data and remain a widely used building block in sequence models. By understanding how GRUs work and how they address the challenges of traditional RNNs, researchers and practitioners can use them to build more effective and efficient models for a wide range of applications.
